Getting Started with R and RStudio

Overview

Teaching: 20 min

Exercises: 10 min

Questions

How to find your way around RStudio?

How to start and organize a new project from RStudio?

How to put the new project under version control and integrate with GitHub?

Objectives

To gain familiarity with the various panes in the RStudio IDE

To gain familiarity with the buttons, shortcuts and options in the RStudio IDE

To understand variables and how to assign to them

To be able to manage your workspace in an interactive R session

To be able to create self-contained projects in RStudio

To be able to use git from within RStudio

Motivation

Science is a multi-step process: once you’ve designed an experiment and collected data, the real fun begins (but remember R. A. Fisher)! Here we will explore R as a tool to organize raw data, perform exploratory analyses, and learn how to plot results graphically. But to start, we will review how to interact with R and use RStudio.

Before Starting This Lesson

Please ensure you have the latest version of R and RStudio installed on your machine. This is important, as some packages may not install correctly (or at all) if R is not up to date.

Download and install the latest version of R here

Download and install RStudio here

Introduction to RStudio

Throughout this lesson, we’ll be using RStudio: a free, open-source R integrated development environment. It provides a built-in editor, works on all platforms (including on servers) and supports many features useful for working in R: syntax highlighting, quick access to R’s help system, plots visible alongside code, and integration with version control.

How to use R?

Much of your time in R will be spent in the R interactive console. This is where you will run all of your code, and can be a useful environment to try out ideas before adding them to an R script file. This console in RStudio is the same as the one you would get if you typed in R in your command-line environment.

The first thing you will see in the R interactive session is a bunch of information, followed by a “>” and a blinking cursor. In many ways this is similar to the shell environment you learned about during the unix lessons: it operates on the same idea of a “Read, evaluate, print loop”: you type in commands, R tries to execute them, and then returns a result.

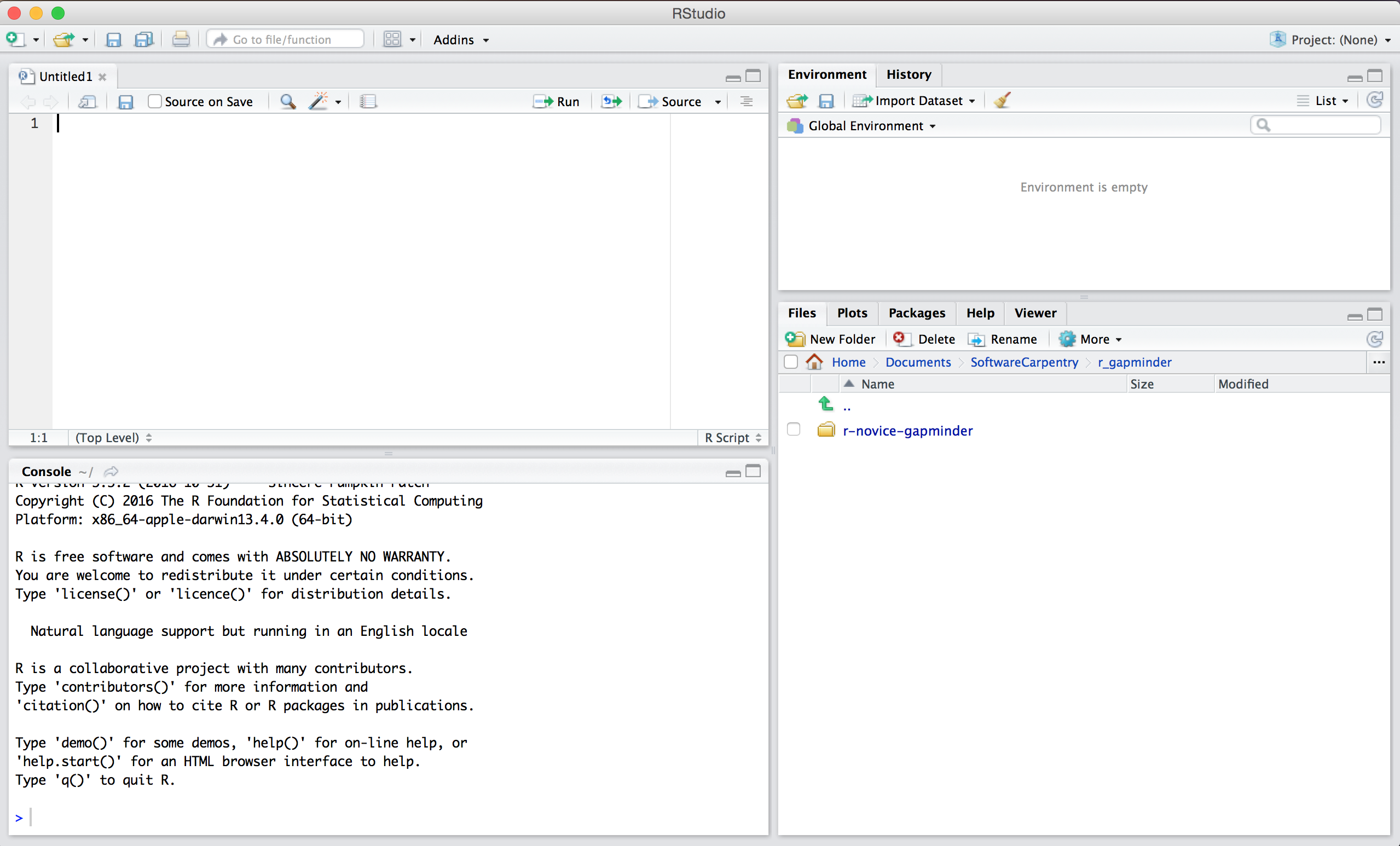

Work flow within RStudio

There are three main ways one can work within RStudio.

- Test and play within the interactive R console, then copy code into a .R file to run later.

  - This works well when doing small tests and initially starting off.

  - You can use the history panel to copy all the commands to the source file.

- Start writing in an .R file and use RStudio’s command / shortcut to push the current line, selected lines or modified lines to the interactive R console.

  - This works well if you are more advanced in writing R code.

  - All your code is saved for later.

  - You will be able to run the file you create from within RStudio or using R’s `source()` function.

- Better yet, you can write your code in an R Markdown file. An R Markdown file allows you to embed your code in the narrative a reader needs to understand it, run it, and display your results. You can use multiple languages including R, Python, and SQL.

Tip: Creating an R Markdown document

You can create a new R Markdown document in RStudio with the menu command File -> New File -> R Markdown. A new window opens and asks you to enter the title, author, and default output format for your R Markdown document. After you enter this information, a new R Markdown file opens in a new tab.

Notice that the file contains three types of content:

- An (optional) YAML header surrounded by ---s;

- R code chunks surrounded by ```s;

- text mixed with simple text formatting.

You can use the “Knit” button in the RStudio IDE to render the file and preview the output with a single click or keyboard shortcut (Shift + Cmd + K). Note that you can quickly insert chunks of code into your file with the keyboard shortcut Ctrl + Alt + I (OS X: Cmd + Option + I) or the Add Chunk command in the editor toolbar. You can run the code in a chunk by pressing the green triangle on the right. To run the current line, you can:

- click on the `Run` button above the editor panel, or

- select “Run Lines” from the “Code” menu, or

- hit Ctrl-Enter in Windows or Linux or Command-Enter on OS X.

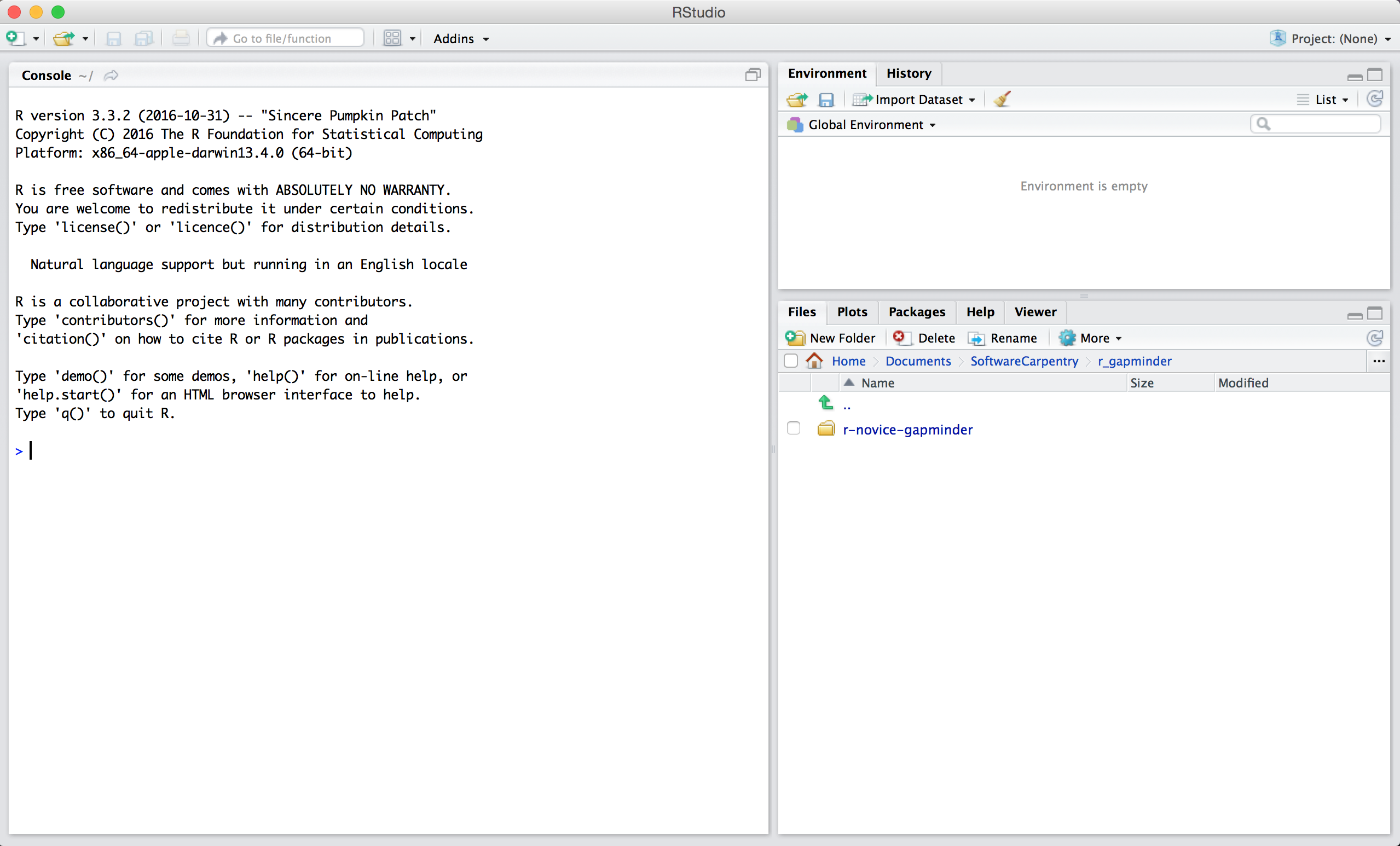

Basic layout

When you first open RStudio, you will be greeted by three panels:

- The interactive R console (entire left)

- Environment/History (tabbed in upper right)

- Files/Plots/Packages/Help/Viewer (tabbed in lower right)

Once you open files, such as R scripts, an editor panel will also open in the top left.

Tip: Do not save your workspace

When you exit R/RStudio, you probably get a prompt about saving your workspace image - don’t do this! In fact, it is recommended that you turn this feature off so you are not tempted: Go to Tools > Global Options and click “Never” in the dropdown next to “Save workspace to .RData on exit”.

Creating a new project

One of the most powerful and useful aspects of RStudio is its project management functionality. We’ll be using this today to create a self-contained, reproducible project.

Challenge: Creating a self-contained project

We’re going to create a new project in RStudio:

- Click the “File” menu button, then “New Project”.

- Click “New Directory”.

- Click “Empty Project”.

- Type in the name of the directory to store your project, e.g. “EEOB546_R_lesson”.

- Make sure that the checkbox for “Create a git repository” is selected.

- Click the “Create Project” button.

Now when we start R in this project directory, or open this project with RStudio, all of our work on this project will be entirely self-contained in this directory.

Best practices for project organization

There are several general principles to adhere to that will make project management easier:

Treat data as read only

This is probably the most important goal of setting up a project. Data is typically time consuming and/or expensive to collect. Working with data interactively (e.g., in Excel), where it can be modified, means you are never sure of where the data came from, or how it has been modified since collection. It is therefore a good idea to treat your data as “read-only”.

Data Cleaning

In many cases your data will be “dirty”: it will need significant preprocessing to get into a format R (or any other programming language) will find useful. This task is sometimes called “data munging”. It can be useful to store the scripts that do this cleaning in a separate folder, and create a second “read-only” data folder to hold the “cleaned” data sets.

Treat generated output as disposable

Anything generated by your scripts should be treated as disposable: you should be able to regenerate it from your scripts.

Tip: Good Enough Practices for Scientific Computing

Good Enough Practices for Scientific Computing gives the following recommendations for project organization:

- Put each project in its own directory, which is named after the project.

- Put text documents associated with the project in the `doc` directory.

- Put raw data and metadata in the `data` directory, and files generated during cleanup and analysis in a `results` directory.

- Put source for the project’s scripts and programs in the `src` directory, and programs brought in from elsewhere or compiled locally in the `bin` directory.

- Name all files to reflect their content or function.
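The layout recommended above can be created from R itself. The following is a minimal sketch, assuming a hypothetical project called `my_project`; it builds the skeleton inside a temporary directory so that nothing in your real working directory is touched.

```r
# Hypothetical project root inside a temporary directory.
project_root <- file.path(tempdir(), "my_project")

# Directory names follow the recommendations listed above.
subdirs <- c("doc", "data", "results", "src", "bin")

for (d in subdirs) {
  # recursive = TRUE also creates project_root if it does not exist yet.
  dir.create(file.path(project_root, d), recursive = TRUE, showWarnings = FALSE)
}

# List the directories that were created.
list.dirs(project_root, full.names = FALSE, recursive = FALSE)
```

In a real project you would replace `tempdir()` with the directory that holds your RStudio project.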

Separate function definition and application

When your project is new and shiny, the script file usually contains many lines of directly executed code. As it matures, reusable chunks get pulled into their own functions. It’s a good idea to separate these into separate folders; one to store useful functions that you’ll reuse across analyses and projects, and one to store the analysis scripts.

Tip: avoiding duplication

You may find yourself using data or analysis scripts across several projects. Typically you want to avoid duplication to save space and avoid having to make updates to code in multiple places.

For this, you can use “symbolic links”, which are essentially shortcuts to files somewhere else on a filesystem. On Linux and OS X you can use the `ln -s` command, and on Windows you can either create a shortcut or use the `mklink` command from the Windows terminal.

Challenge 1

Create a new readme file in the project directory you created and add some information about the project.

Version Control

We also set up our project to integrate with git, putting it under version control. RStudio has a nicer interface to git than the shell, but is somewhat limited in what it can do, so you will find yourself occasionally needing to use the shell. Let’s go through and make an initial commit of our template files.

The workspace/history pane has a tab for “Git”. We can stage each file by checking the box: you will see a green “A” next to staged files and folders, and yellow question marks next to files or folders git doesn’t know about yet. RStudio also nicely shows you the difference between files from different commits.

Tip: versioning disposable output

Generally you do not want to version disposable output (or read-only data). You should modify the `.gitignore` file to tell git to ignore these files and directories.

Challenge 2

- Create a directory within your project called `graphs`.

- Modify the `.gitignore` file to contain `graphs/` so that this disposable output isn’t versioned.

Add the newly created folders to version control using the git interface.

Solution to Challenge 2

This can be done with the command line:

$ mkdir graphs

$ echo "graphs/" >> .gitignore

Now we want to push the contents of this commit to GitHub, so it is also backed-up off site and available to collaborators.

Challenge 3

- In GitHub, create a New repository, called here `BCB546-R-Exercise`. Don’t initialize it with a README file because we’ll be importing an existing repository…

- Make sure you have a proper public key in your GitHub settings

- In RStudio, again click Tools -> Shell … . Enter:

$ echo "# BCB546-R-Exercise" >> README.md

$ git add README.md

$ git commit -m "first commit"

$ git remote add origin git@github.com:[path to your directory]

$ git push -u origin master

- Change README.md file to indicate the changes you made

- Commit and push it from the Git tab on the Environment/history panel of RStudio.

Key Points

Use RStudio to create and manage projects with consistent layout.

Treat raw data as read-only.

Treat generated output as disposable.

Separate function definition and application.

Use version control.

R Language Basics

Overview

Teaching: 30 min

Exercises: 20 min

Questions

How to do simple calculations in R?

How to assign values to variables and call functions?

What are R’s vectors, vector data types, and vectorization?

How to install packages?

How can I get help in R?

What is an R Markdown file and how can I create one?

Objectives

To understand variables and how to assign to them

To be able to use mathematical and comparison operations

To be able to call functions

To be able to find and use packages

To be able to read R help files for functions and special operators.

To be able to seek help from your peers.

To be able to create and use R Markdown files

Introduction to R

R Operators

The simplest thing you could do with R is arithmetic:

1 + 100

[1] 101

Here we used two numbers and a + sign, which is called an operator. An operator is a symbol that tells R to perform specific mathematical or logical manipulations.

Arithmetic operators

There are several arithmetic operators we can use and they should look familiar to you. If you use several of them (e.g., `5 + 2 * 3`), the order of operations is the same as you would have learned back in school. The list below is arranged from highest to lowest precedence:

Arithmetic operations

| Operator | Description |
|---|---|
| `( )` | Parentheses |
| `^` or `**` | Exponents |
| `/` | Divide |
| `*` | Multiply |
| `-` | Subtract |
| `+` | Add |

Examples

3 + 5 * 2^5

[1] 163

Use parentheses to group operations in order to force the order of evaluation if it differs from the default, or to make clear what you intend.

((3 + 5) * 2)^5

[1] 1048576

Really small or large numbers get a scientific notation:

2/10000

[1] 2e-04

You can write numbers in scientific notation too:

5e3

[1] 5000

Like bash, if you type in an incomplete command, R will wait for you to complete it. Any time you hit return and the R session shows a + instead of a >, it means it’s waiting for you to complete the command. If you want to cancel a command you can simply hit “Esc” and RStudio will give you back the > prompt.

Tip: Cancelling commands

If you’re using R from the command line instead of from within RStudio, you need to use `Ctrl+C` instead of `Esc` to cancel the command. This applies to Mac users as well!

Cancelling a command isn’t only useful for killing incomplete commands: you can also use it to tell R to stop running code (for example if it’s taking much longer than you expect), or to get rid of the code you’re currently writing.

Relational Operators

R also has relational operators that allow us to do comparison:

R Relational Operators

| Operator | Description |
|---|---|
| `<` | Less than |
| `>` | Greater than |
| `<=` | Less than or equal to |
| `>=` | Greater than or equal to |
| `==` | Equal to |
| `!=` | Not equal to |

Examples

1 == 1; # equality (note two equals signs, read as "is equal to")

[1] TRUE

1 != 1; # inequality (read as "is not equal to")

[1] FALSE

1 < 2; # less than

[1] TRUE

# etc

Comparing Numbers

Let’s compare `0.1 + 0.2` and `0.3`. What do you think?

0.1 + 0.2 == 0.3

[1] FALSE

What’s happened?

Computers may only represent decimal numbers with a certain degree of precision, so two numbers which look the same when printed out by R may actually have different underlying representations and therefore differ by a small margin of error (called machine numeric tolerance). So, unless you compare two integers, you should use the `all.equal` function:

all.equal(0.1 + 0.2, 0.3)

[1] TRUE

Further reading: http://floating-point-gui.de/
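One detail worth knowing when comparing numbers this way: `all.equal()` returns `TRUE` when the values match within tolerance, but a character description of the difference (not `FALSE`) when they do not. A small sketch of the usual idiom, wrapping the call in `isTRUE()` so it is safe inside `if` statements:

```r
a <- 0.1 + 0.2
b <- 0.3

a == b                      # exact comparison: FALSE
isTRUE(all.equal(a, b))     # comparison within a tolerance: TRUE

# On a mismatch, all.equal() returns a message rather than FALSE ...
all.equal(1, 1.1)

# ... so wrap it in isTRUE() to always get a single TRUE or FALSE.
isTRUE(all.equal(1, 1.1))   # FALSE
```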

Other operators

There are several other types of operators:

- Logical Operators

- Assignment Operators

- Miscellaneous Operators

We will see some of them in the next section and talk more about them in later lessons (but see here if you can’t wait). Before we do, we need to introduce variables.

Variables and assignment

A variable provides us with named storage that our programs can manipulate.

We can store values in variables using the assignment operator <-:

x <- 1/40

Notice that assignment does not print a value. Instead, we stored it for later in something called a variable. x now contains the value 0.025:

x

[1] 0.025

Look for the Environment tab in one of the panes of RStudio, and you will see that x and its value have appeared. Our variable x can be used in place of a number in any calculation that expects a number:

x^2

[1] 0.000625

Notice also that variables can be reassigned:

x <- 100

x used to contain the value 0.025 and now it has the value 100.

Assignment values can contain the variable being assigned to:

x <- x + 1; #notice how RStudio updates its description of x on the top right tab

x

[1] 101

The right hand side of the assignment can be any valid R expression. The right hand side is fully evaluated before the assignment occurs.

Tip: A shortcut for assignment operator

In RStudio, you can create the <- assignment operator in one keystroke using `Option -` (that’s a dash) on OS X or `Alt -` on Windows/Linux.

It is also possible to use the = operator for assignment:

x = 1/40

But this is much less common among R users. So the recommendation is to use <-.

Naming variables

Variable names can contain letters, numbers, underscores and periods. They cannot start with a number or an underscore, nor contain spaces at all. Different people use different conventions for long variable names; these include

- periods.between.words

- underscores_between_words

- camelCaseToSeparateWords

What you use is up to you, but be consistent.

Warning:

Notice that R does not use a special symbol to distinguish a variable from plain text. Instead, text is placed in double quotes: “text”. If R complains that the object text does not exist, you probably forgot to use the quotes!

Reserved words

Reserved words in R programming are a set of words that have special meaning and cannot be used as an identifier (variable name, function name etc.). To view the list of reserved words you can type `help(reserved)` or `?reserved` at the R command prompt.

Vectorization

One final thing to be aware of is that R is vectorized, meaning that variables and functions can have vectors as values. We can create vectors using several commands:

Vectorization

| Command | Description |
|---|---|
| `c(2, 4, 6)` | Join elements into a vector |
| `1:5` | Create an integer sequence |
| `seq(2, 3, by=0.5)` | Create a sequence with a specific increment |
| `rep(1:2, times=3)` | Repeat a vector |
| `rep(1:2, each=3)` | Repeat elements of a vector |

Examples

1:5;

[1] 1 2 3 4 5

2^(1:5);

[1] 2 4 8 16 32

x <- 1:5; 2^x;

[1] 2 4 8 16 32
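The remaining commands from the table can be tried the same way. This short sketch shows what each one returns, plus two element-by-element operations; the vector `v` is just an illustrative name.

```r
seq(2, 3, by = 0.5)    # 2.0 2.5 3.0
rep(1:2, times = 3)    # 1 2 1 2 1 2
rep(1:2, each = 3)     # 1 1 1 2 2 2

# Arithmetic and comparisons are applied to every element at once.
v <- c(2, 4, 6)
v * 10                 # 20 40 60
v > 3                  # FALSE TRUE TRUE
```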

This is incredibly powerful; we will discuss this further in an upcoming lesson.

Notice that in the table above we see some functions, which can be recognized by the parentheses that follow one or more letters (e.g., seq(2, 3, by=0.5)). R has many built-in functions which can be called directly without defining them first. We can also create and use our own functions, referred to as user-defined functions. You can see some additional examples of built-in functions in this handy Cheat Sheet.
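As a brief illustration of a user-defined function, here is a minimal sketch. The name `celsius_to_kelvin` is made up for this example and is not part of R.

```r
# Hypothetical helper: convert a temperature from Celsius to Kelvin.
celsius_to_kelvin <- function(temp_c) {
  temp_c + 273.15
}

celsius_to_kelvin(0)            # called with a single value
celsius_to_kelvin(c(-40, 25))   # works on a whole vector too
```

Once defined, the function is called exactly like a built-in one, and it appears in the Environment tab alongside your variables.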

Managing your environment

There are a few useful commands you can use to interact with the R session.

ls will list all of the variables and functions stored in the global environment (your working R session):

ls() # to list files use list.files() function

[1] "args" "dest_md" "op" "src_rmd" "x"

Tip: hidden objects

Like in the shell, `ls` will hide any variables or functions starting with a “.” by default. To list all objects, type `ls(all.names=TRUE)` instead.

Note here that we didn’t give any arguments to ls, but we still needed to give the parentheses to tell R to call the function.

If we type ls by itself, R will print out the source code for that function!

ls

function (name, pos = -1L, envir = as.environment(pos), all.names = FALSE,

pattern, sorted = TRUE)

{

if (!missing(name)) {

pos <- tryCatch(name, error = function(e) e)

if (inherits(pos, "error")) {

name <- substitute(name)

if (!is.character(name))

name <- deparse(name)

warning(gettextf("%s converted to character string",

sQuote(name)), domain = NA)

pos <- name

}

}

all.names <- .Internal(ls(envir, all.names, sorted))

if (!missing(pattern)) {

if ((ll <- length(grep("[", pattern, fixed = TRUE))) &&

ll != length(grep("]", pattern, fixed = TRUE))) {

if (pattern == "[") {

pattern <- "\\["

warning("replaced regular expression pattern '[' by '\\\\['")

}

else if (length(grep("[^\\\\]\\[<-", pattern))) {

pattern <- sub("\\[<-", "\\\\\\[<-", pattern)

warning("replaced '[<-' by '\\\\[<-' in regular expression pattern")

}

}

grep(pattern, all.names, value = TRUE)

}

else all.names

}

<bytecode: 0x7f9b3d394c38>

<environment: namespace:base>

You can use rm to delete objects you no longer need:

rm(x)

If you have lots of things in your environment and want to delete all of them, you can pass the results of ls to the rm function:

rm(list = ls())

In this case we’ve combined the two. Like the order of operations, anything inside the innermost parentheses is evaluated first, and so on.

Tip: Warnings vs. Errors

Pay attention when R does something unexpected! Errors are thrown when R cannot proceed with a calculation. Warnings, on the other hand, usually mean that the function has run, but it probably hasn’t worked as expected.

In both cases, the message that R prints out usually gives you clues about how to fix the problem.
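To see the difference in practice, the sketch below triggers one warning and one error. `tryCatch()` is used here only so that the messages can be captured and the script keeps running; at the console you would simply see them printed.

```r
# A warning: as.integer("abc") would return NA, and R flags the problem.
# The handler captures the warning message as text.
res <- tryCatch(
  as.integer("abc"),
  warning = function(w) conditionMessage(w)
)
res

# An error: adding a number and a string cannot proceed at all.
err <- tryCatch(
  1 + "a",
  error = function(e) conditionMessage(e)
)
err
```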

R Packages

It is possible to add functions to R by writing a package, or by obtaining a package written by someone else. There are over 7,000 packages available on CRAN (the Comprehensive R Archive Network). R and RStudio have functionality for managing packages:

- You can see what packages are installed by typing `installed.packages()`

- You can install packages by typing `install.packages("packagename")`, where `packagename` is the package name, in quotes.

- You can update installed packages by typing `update.packages()`

- You can remove a package with `remove.packages("packagename")`

- You can make a package available for use with `library(packagename)`
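A common pattern that combines these commands is to install a package only when it is missing and then attach it. This is a sketch rather than a required workflow; `stats` is used as the example because it ships with R, so nothing is downloaded when you run it.

```r
# Package to use; replace "stats" with the package you actually need.
pkg <- "stats"

# requireNamespace() returns FALSE if the package is not installed.
if (!requireNamespace(pkg, quietly = TRUE)) {
  install.packages(pkg)
}

# character.only = TRUE lets library() take the name from a variable.
library(pkg, character.only = TRUE)

# Check which version is installed.
packageVersion(pkg)
```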

Writing your own code

Good coding style is like using correct punctuation. You can manage without it, but it sure makes things easier to read. As with styles of punctuation, there are many possible variations. Google’s R style guide is a good place to start. The formatR package, by Yihui Xie, makes it easier to clean up poorly formatted code. Make sure to read the notes on the wiki before using it.

Looking for help

Reading Help files

R, and every package, provide help files for functions. To search for help use:

?function_name

help(function_name)

This will load up a help page in RStudio (or as plain text in R by itself).

Note that each help page is broken down into several sections, including Description, Usage, Arguments, Examples, etc.

Operators

To seek help on operators, use quotes:

?"+"

Getting help on packages

Many packages come with “vignettes”: tutorials and extended example documentation.

Without any arguments, vignette() will list all vignettes for all installed packages; vignette(package="package-name") will list all available vignettes for package-name, and vignette("vignette-name") will open the specified vignette.

If a package doesn’t have any vignettes, you can usually find help by typing help("package-name").

When you kind of remember the function

If you’re not sure what package a function is in, or how it’s specifically spelled, you can do a fuzzy search:

??function_name

When you have no idea where to begin

If you don’t know what function or package you need to use, CRAN Task Views is a specially maintained list of packages grouped into fields. This can be a good starting point.

When your code doesn’t work: seeking help from your peers

If you’re having trouble using a function, 9 times out of 10 the question you are asking has already been answered on Stack Overflow. You can search using the [r] tag.

If you can’t find the answer, there are two useful functions to help you ask a question from your peers:

?dput

Will dump the data you’re working with into a format so that it can be copied and pasted by anyone else into their R session.
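As a small illustration of what `dput()` produces, the sketch below uses a made-up data frame `df`. The printed text is ordinary R code, so evaluating it rebuilds the object, which is exactly what a helper on a forum would do after pasting it.

```r
# A small, made-up data frame standing in for your real data.
df <- data.frame(id = 1:3, group = c("a", "b", "a"))

# Prints R code that recreates df; this is what you would paste into a question.
dput(df)

# Round trip: capture that text and evaluate it to rebuild the object.
code <- paste(capture.output(dput(df)), collapse = "\n")
df_copy <- eval(parse(text = code))

all.equal(df, df_copy)
```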

sessionInfo()

R version 4.0.4 (2021-02-15)

Platform: x86_64-apple-darwin17.0 (64-bit)

Running under: macOS Catalina 10.15.7

Matrix products: default

BLAS: /Library/Frameworks/R.framework/Versions/4.0/Resources/lib/libRblas.dylib

LAPACK: /Library/Frameworks/R.framework/Versions/4.0/Resources/lib/libRlapack.dylib

locale:

[1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] knitr_1.31

loaded via a namespace (and not attached):

[1] compiler_4.0.4 magrittr_2.0.1 tools_4.0.4 stringi_1.5.3 stringr_1.4.0

[6] xfun_0.22 evaluate_0.14

Will print out your current version of R, as well as any packages you have loaded.

Vocabulary

As with any language, it’s important to work on your R vocabulary. Here is a good place to start

Challenge 1

Which of the following are valid R variable names?

min_height

max.height

_age

.mass

MaxLength

min-length

2widths

celsius2kelvin

Solution to challenge 1

The following can be used as R variables:

min_height

max.height

MaxLength

celsius2kelvin

The following creates a hidden variable:

.mass

The following cannot be used to create a variable:

_age

min-length

2widths

Challenge 2

What will be the value of each variable after each statement in the following program?

mass <- 47.5

age <- 122

mass <- mass * 2.3

age <- age - 20

Solution to challenge 2

mass <- 47.5

This will give a value of 47.5 for the variable mass

age <- 122

This will give a value of 122 for the variable age

mass <- mass * 2.3

This will multiply the existing value of 47.5 by 2.3 to give a new value of 109.25 to the variable mass.

age <- age - 20

This will subtract 20 from the existing value of 122 to give a new value of 102 to the variable age.

Challenge 3

Clean up your working environment by deleting the mass and age variables.

Solution to challenge 3

We can use the `rm` command to accomplish this task:

rm(age, mass)

Challenge 4

Install packages in the `tidyverse` collection

Solution to challenge 4

We can use the `install.packages()` command to install the required packages.

install.packages("tidyverse")

Challenge 5

Use help to find a function (and its associated parameters) that you could use to load data from a csv file in which columns are delimited with “\t” (tab) and the decimal point is a “.” (period). This check for decimal separator is important, especially if you are working with international colleagues, because different countries have different conventions for the decimal point (i.e. comma vs period). Hint: use `??csv` to look up csv related functions.


Key Points

R has the usual arithmetic operators and mathematical functions.

Use `<-` to assign values to variables.

Use `ls()` to list the variables in a program.

Use `rm()` to delete objects in a program.

Use `install.packages()` to install packages (libraries).

Use `library(packagename)` to make a package available for use.

Use `help()` to get online help in R.

Data Structures

Overview

Teaching: 50 min

Exercises: 30 min

Questions

What are the basic data types in R?

How do I represent categorical information in R?

Objectives

To be aware of the different types of data.

To begin exploring data frames, and understand how they’re related to vectors, factors and lists.

To be able to ask questions from R about the type, class, and structure of an object.

Disclaimer: This lesson is based on a chapter from the Advanced R book.

Data structures

R’s base data structures can be organized by their dimensionality (1d, 2d, or nd) and whether they’re homogeneous (all contents must be of the same type) or heterogeneous (the contents can be of different types):

R’s base data structures

| | Homogeneous | Heterogeneous |
|---|---|---|
| 1d | Atomic vector | List |
| 2d | Matrix | Data frame |
| nd | Array | |

Almost all other objects are built upon these foundations. Note that R has no 0-dimensional, or scalar types. Individual numbers or strings are vectors of length one.

Given an object, the best way to understand what data structures it’s composed of is to use str(). str() is short for structure and it gives a compact, human readable description of any R data structure.

Vectors

The basic data structure in R is the vector. Vectors come in two flavors: atomic vectors and lists. They have three common properties:

- Type, `typeof()`, what it is.

- Length, `length()`, how many elements it contains.

- Attributes, `attributes()`, additional arbitrary metadata.

They differ in the types of their elements: all elements of an atomic vector must be the same type, whereas the elements of a list can have different types.

Atomic vectors

There are four common types of atomic vectors: logical, integer, double (often called numeric), and character.

Atomic vectors are usually created with c(), short for combine.

dbl_var <- c(1, 2.5, 4.5)

# With the L suffix, you get an integer rather than a double

int_var <- c(1L, 6L, 10L)

# Use TRUE and FALSE (or T and F) to create logical vectors

log_var <- c(TRUE, FALSE, T, F)

chr_var <- c("these are", "some strings")

Atomic vectors are always flat, even if you nest c()’s: Try c(1, c(2, c(3, 4)))

Missing values are specified with NA, which is a logical vector of length 1. NA will always be coerced to the correct type if used inside c().
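The coercion of `NA` is easy to check with `typeof()`. A quick sketch:

```r
typeof(NA)              # "logical"
typeof(c(NA, 1L))       # "integer"
typeof(c(NA, 2.5))      # "double"
typeof(c(NA, "a"))      # "character"

# The missing value stays missing, whatever the type of the vector.
is.na(c(NA, "a"))       # TRUE FALSE
```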

Coercion

All elements of an atomic vector must be the same type, so when you attempt to combine different types they will be coerced to the most flexible type. The coercion rules go: logical -> integer -> double -> complex -> character, where -> can be read as are transformed into. You can try to force coercion against this flow using the as. functions.
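For instance, the following sketch forces coercion against the usual direction with a few of the `as.` functions. The final call is wrapped in `suppressWarnings()` only to keep the output quiet; without it R warns that NAs were introduced by coercion.

```r
as.integer(c(1.9, 2.1))       # double -> integer drops the fractional part: 1 2
as.logical(c(0, 1, 5))        # double -> logical: FALSE TRUE TRUE
as.numeric(c("1.5", "20"))    # character -> double: 1.5 20.0

# Values that cannot be converted become NA.
suppressWarnings(as.numeric(c("3", "three")))   # 3 NA
```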

Challenge 1

Create the following vectors and predict their type:

a <- c("a", 1)

b <- c(TRUE, 1)

c <- c(1L, 10)

d <- c(a, b, c)

Solution to Challenge 1

typeof(a); typeof(b); typeof(c); typeof(d)

[1] "character"

[1] "double"

[1] "double"

[1] "character"

Coercion often happens automatically. Most mathematical functions (+, log, abs, etc.) will coerce to a double or integer, and most logical operations (&, |, any, etc) will coerce to a logical. You will usually get a warning message if the coercion might lose information. If confusion is likely, explicitly coerce with as.character(), as.double(), as.integer(), or as.logical().

TIP

When a logical vector is coerced to an integer or double, `TRUE` becomes 1 and `FALSE` becomes 0. This is very useful in conjunction with `sum()` and `mean()`, which will calculate the total number and proportion of “TRUE’s”, respectively.
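A short sketch of this tip, using made-up height values: the comparison produces a logical vector, and `sum()` and `mean()` then count and average the `TRUE` values.

```r
heights <- c(150, 162, 171, 180, 158)   # made-up values
tall <- heights > 160                   # FALSE TRUE TRUE TRUE FALSE

sum(tall)    # number of TRUE values: 3
mean(tall)   # proportion of TRUE values: 0.6
```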

Lists

Lists are one dimensional data structures that are different from atomic vectors because their elements can be of any type, including lists. We construct lists by using list() instead of c():

x <- c(1,2,3)

y <- list(1,2,3)

z <- list(1:3, "a", c(TRUE, FALSE, TRUE), c(2.3, 5.9))

RESULTS

str(x); str(y); str(z)

num [1:3] 1 2 3

List of 3

$ : num 1

$ : num 2

$ : num 3

List of 4

$ : int [1:3] 1 2 3

$ : chr "a"

$ : logi [1:3] TRUE FALSE TRUE

$ : num [1:2] 2.3 5.9

Lists are sometimes called recursive vectors, because a list can contain other lists. This makes them fundamentally different from atomic vectors.

x <- list(list(list(list())))

str(x)

List of 1

$ :List of 1

..$ :List of 1

.. ..$ : list()

is.recursive(x)

[1] TRUE

c() will combine several lists into one. If given a combination of atomic vectors and lists, c() will coerce the vectors to lists before combining them.

Example

Compare the results of `list()` and `c()`:

x <- list(list(1, 2), c(3, 4))

y <- c(list(1, 2), c(3, 4))

str(x); str(y)

List of 2

$ :List of 2

..$ : num 1

..$ : num 2

$ : num [1:2] 3 4

List of 4

$ : num 1

$ : num 2

$ : num 3

$ : num 4

The typeof() of a list is list. You can test for a list with is.list() and coerce to a list with as.list(). You can turn a list into an atomic vector with unlist(). If the elements of a list have different types, unlist() uses the same coercion rules as c().
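These functions can be tried on a small list with mixed element types. In this sketch, `l` and `u` are just illustrative names.

```r
l <- list(1, "a", TRUE)

typeof(l)       # "list"
is.list(l)      # TRUE

# unlist() flattens the list; mixed types are coerced to character.
u <- unlist(l)
u               # "1" "a" "TRUE"
typeof(u)       # "character"

# as.list() goes the other way: one list element per vector element.
str(as.list(1:3))
```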

Discussion 1

What are the common types of atomic vector? How does a list differ from an atomic vector?

What will the commands `is.vector(list(1,2,3))` and `is.numeric(c(1L,2L,3L))` produce? How about `typeof(as.numeric(c(1L,2L,3L)))`?

Test your knowledge of vector coercion rules by predicting the output of the following uses of `c()`:

c(1, FALSE)

c("a", 1)

c(list(1), "a")

c(TRUE, 1L)

Why do you need to use `unlist()` to convert a list to an atomic vector? Why doesn’t `as.vector()` work?

Why is `1 == "1"` true? Why is `-1 < FALSE` true? Why is `"one" < 2` false?

Why is the default missing value, `NA`, a logical vector? What’s special about logical vectors? (Hint: think about `c(FALSE, NA)` vs. `c(FALSE, NA_character_)`.)

Attributes

All objects can have arbitrary additional attributes, used to store metadata about the object. Attributes can be thought of as a named list (with unique names). Attributes can be accessed individually with attr() or all at once (as a list) with attributes().
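A minimal sketch of setting and reading an attribute; the attribute name `my_attribute` is arbitrary and chosen only for this example.

```r
y <- 1:10

# Attach an arbitrary piece of metadata to the vector.
attr(y, "my_attribute") <- "This is a vector"

attr(y, "my_attribute")    # "This is a vector"

# attributes() returns everything at once, as a named list.
str(attributes(y))
```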

The three most important attributes:

- Names, a character vector giving each element a name, described in names.

- Dimensions, used to turn vectors into matrices and arrays, described in matrices and arrays.

- Class, used to implement the S3 object system.

Each of these attributes has a specific accessor function to get and set values: names(x), dim(x), and class(x).

Names

You can name elements in a vector in three ways:

- When creating it:

x <- c(a = 1, b = 2, c = 3)

- By modifying an existing vector in place:

x <- 1:3; names(x) <- c("a", "b", "c")

- By creating a modified copy of a vector:

x <- 1:3; x <- setNames(x, c("a", "b", "c"))

You can see these names by just typing the vector’s name. You can access them

by using the names(x) function.

x <- 1:3;

x <- setNames(x, c("a", "b", "c"))

x

a b c

1 2 3

names(x)

[1] "a" "b" "c"

Names don’t have to be unique and not all elements of a vector need to have a name.

If some names are missing, names() will return an empty string for those elements.

If all names are missing, names() will return NULL.

You can create a new vector without names using unname(x), or remove names in

place with names(x) <- NULL.
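The following sketch walks through the cases just described: partially named, unnamed, and names removed. The vectors `y` and `z` are invented for the example.

```r
# only the first element is named (the names are illustrative)
y <- c(a = 1, 2, 3)
names(y)            # "a" "" ""

# no names at all
z <- c(1, 2, 3)
names(z)            # NULL

# unname() returns a copy without names; y itself is unchanged
unname(y)
names(y)            # still "a" "" ""

# names(y) <- NULL strips the names from y itself
names(y) <- NULL
names(y)            # NULL
```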

Factors

One important use of attributes is to define factors. A factor is a vector that

can contain only predefined values, and is used to store categorical data.

Factors are built on top of integer vectors using two attributes: the class(),

“factor”, which makes them behave differently from regular integer vectors, and

the levels(), which defines the set of allowed values.

Examples

x <- c("a", "b", "b", "a")

x

[1] "a" "b" "b" "a"

x <- factor(x)

x

[1] a b b a

Levels: a b

class(x)

[1] "factor"

levels(x)

[1] "a" "b"

Factors are useful when you know the possible values a variable may take. Using a factor instead of a character vector makes it obvious when some groups contain no observations:

sex_char <- c("m", "m", "m")

sex_factor <- factor(sex_char, levels = c("m", "f"))

table(sex_char)

sex_char

m

3

table(sex_factor)

sex_factor

m f

3 0
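To see that a factor really is an integer vector with two attributes, one can peek underneath it. This is a small sketch; the factor `f` and its unused level "c" are made up for illustration.

```r
# `f` is an illustrative factor with an unused level "c"
f <- factor(c("a", "b", "b", "a"), levels = c("a", "b", "c"))

typeof(f)        # "integer": the underlying storage
as.integer(f)    # 1 2 2 1, positions within levels(f)
attributes(f)    # the levels and class attributes

# a value outside the allowed levels becomes NA (R issues a warning)
f[2] <- "z"
f
```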

Factors crop up all over R, and occasionally cause headaches for new R users.

Matrices and arrays

Adding a dim() attribute to an atomic vector allows it to behave like a

multi-dimensional array. An array with two dimensions is called a matrix.

Matrices are used commonly as part of the mathematical

machinery of statistics. Arrays are much rarer, but worth being aware of.

Matrices and arrays are created with matrix() and array(),

or by using the assignment form of dim().

# Two scalar arguments to specify rows and columns

a <- matrix(1:6, ncol = 3, nrow = 2)

# One vector argument to describe all dimensions

b <- array(1:12, c(2, 3, 2))

# You can also modify an object in place by setting dim()

c <- 1:12

dim(c) <- c(3, 4)

c

[,1] [,2] [,3] [,4]

[1,] 1 4 7 10

[2,] 2 5 8 11

[3,] 3 6 9 12

dim(c) <- c(4, 3)

c

[,1] [,2] [,3]

[1,] 1 5 9

[2,] 2 6 10

[3,] 3 7 11

[4,] 4 8 12

dim(c) <- c(2, 3, 2)

c

, , 1

[,1] [,2] [,3]

[1,] 1 3 5

[2,] 2 4 6

, , 2

[,1] [,2] [,3]

[1,] 7 9 11

[2,] 8 10 12

You can test if an object is a matrix or array using is.matrix() and is.array(),

or by looking at the length of the dim(). as.matrix() and as.array() make

it easy to turn an existing vector into a matrix or array.
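A brief sketch of the testing and coercion functions mentioned above. The names `v`, `m`, and `a` are arbitrary.

```r
# `v`, `m` and `a` are illustrative names
v <- 1:6
is.matrix(v)             # FALSE: a plain vector is not a matrix

# as.matrix() turns a vector into a one-column matrix
m <- as.matrix(v)
is.matrix(m)             # TRUE
dim(m)                   # 6 1

# as.array() turns a vector into a one-dimensional array
a <- as.array(v)
length(dim(a))           # 1
is.matrix(a)             # FALSE: a matrix needs exactly two dimensions

# the number of dimensions is the length of dim()
length(dim(array(1:12, c(2, 3, 2))))   # 3
```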

Discussion 2

What does dim() return when applied to a vector?

If is.matrix(x) is TRUE, what will is.array(x) return?

How would you describe the following three objects? What makes them different to 1:5?

x1 <- array(1:5, c(1, 1, 5))

x2 <- array(1:5, c(1, 5, 1))

x3 <- array(1:5, c(5, 1, 1))

Data frames

A data frame is the most common way of storing data in R, and if used systematically makes data analysis easier. Under the hood, a data frame is a list of equal-length vectors. This makes it a 2-dimensional structure, so it shares properties of both the matrix and the list.

Useful Data Frame Functions

- head() - shows first 6 rows

- tail() - shows last 6 rows

- dim() - returns the dimensions of the data frame (i.e. number of rows and number of columns)

- nrow() - number of rows

- ncol() - number of columns

- str() - structure of the data frame: name, type and preview of data in each column

- names() - shows the names attribute for a data frame, which gives the column names

- sapply(dataframe, class) - shows the class of each column in the data frame
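The functions listed above can be tried on any data frame. The sketch below uses a small invented data frame called `toy`; its column names and values are assumptions made only for this example.

```r
# `toy` is a made-up data frame used only for this illustration
toy <- data.frame(
  id    = 1:8,
  group = rep(c("a", "b"), times = 4),
  value = c(2.5, 3.1, 4.7, 1.2, 9.9, 6.3, 5.0, 7.8),
  stringsAsFactors = FALSE
)

head(toy)            # first 6 rows
tail(toy, n = 2)     # last 2 rows (the default is 6)
dim(toy)             # 8 3
nrow(toy)            # 8
ncol(toy)            # 3
names(toy)           # "id" "group" "value"
str(toy)             # name, type and preview of each column
sapply(toy, class)   # class of each column
```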

Creation

You create a data frame using data.frame(), which takes named vectors as input:

df <- data.frame(x = 1:3, y = c("a", "b", "c"))

str(df)

'data.frame': 3 obs. of 2 variables:

$ x: int 1 2 3

$ y: chr "a" "b" "c"

In R versions before 4.0.0, data.frame()’s default behavior was to turn strings into

factors. Use stringsAsFactors = FALSE to suppress this behavior!

df <- data.frame(

x = 1:3,

y = c("a", "b", "c"),

stringsAsFactors = FALSE)

str(df)

'data.frame': 3 obs. of 2 variables:

$ x: int 1 2 3

$ y: chr "a" "b" "c"

Testing and coercion

Because a data.frame is an S3 class, its type reflects the underlying vector

used to build it: the list. To check if an object is a data frame, use class()

or test explicitly with is.data.frame():

Examples

is.vector(df)

[1] FALSE

is.list(df)

[1] TRUE

is.data.frame(df)

[1] TRUE

typeof(df)

[1] "list"

class(df)

[1] "data.frame"

You can coerce an object to a data frame with as.data.frame():

- A vector will create a one-column data frame.

- A list will create one column for each element; it’s an error if they’re not all the same length.

- A matrix will create a data frame with the same number of columns and rows as the matrix.
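The three coercion cases can be checked directly. In this sketch the object names (`df_vec`, `df_list`, `df_mat`, `res`) are invented; `try()` is used so that the deliberate error does not stop the script.

```r
# a vector becomes a one-column data frame
df_vec <- as.data.frame(1:3)
dim(df_vec)                       # 3 1

# a list becomes one column per element
df_list <- as.data.frame(list(x = 1:3, y = c("a", "b", "c")))
dim(df_list)                      # 3 2

# a matrix keeps its numbers of rows and columns
df_mat <- as.data.frame(matrix(1:6, nrow = 2))
dim(df_mat)                       # 2 3

# list elements of incompatible lengths are an error
res <- try(as.data.frame(list(x = 1:3, y = 1:2)), silent = TRUE)
inherits(res, "try-error")        # TRUE
```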

Discussion 3

What attributes does a data frame possess?

What does as.matrix() do when applied to a data frame with columns of different types?

Can you have a data frame with 0 rows? What about 0 columns?

Key Points

Atomic vectors are usually created with c(), short for combine;

Lists are constructed by using list();

Data frames are created with data.frame(), which takes named vectors as input;

The basic data types in R are double, integer, complex, logical, and character;

All objects can have arbitrary additional attributes, used to store metadata about the object;

Adding a dim() attribute to an atomic vector creates a multi-dimensional array;

Data Subsetting

Overview

Teaching: 30 min

Exercises: 10 min

Questions

How can I work with subsets of data in R?

Objectives

To be able to subset vectors, factors, lists, and dataframes

To be able to extract individual and multiple elements: by index, by name, using comparison operations

To be able to skip and remove elements from various data structures.

Disclaimer: This tutorial is partially based on the excellent book Advanced R. Read chapter 4 to learn how to subset other data structures.

Subsetting

R has many powerful subset operators and mastering them will allow you to easily perform complex operations on any kind of dataset.

There are six different ways we can subset any kind of object, and three different subsetting operators for the different data structures.

Let’s start with the workhorse of R: atomic vectors.

Subsetting atomic vectors

By position

| Code | Meaning |
|---|---|
| x[4] | The fourth element |
| x[-4] | All but the fourth |
| x[2:4] | Elements two to four |
| x[-(2:4)] | All except two to four |
| x[c(1,5)] | Elements one and five |

Important! Vector numbering in R starts at 1

In many programming languages (C and Python, for example), the first element of a vector has an index of 0. In R, the first element has an index of 1.
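A quick sketch of positional subsetting with 1-based indices. The values in `x` are illustrative.

```r
# the vector values are illustrative
x <- c(5.4, 6.2, 7.1, 4.8, 7.5)

x[1]          # 5.4: the first element has index 1, not 0
x[4]          # 4.8
x[-4]         # everything except the fourth element
x[2:4]        # 6.2 7.1 4.8
x[c(1, 5)]    # 5.4 7.5
x[0]          # numeric(0): index 0 selects nothing
```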

By name

| Code | Meaning |
|---|---|
| x["b"] | An element named “b” |

Tip: Duplicated names

Although indexing by name is usually a much more reliable way to subset objects, notice what happens if we have several elements named “a”:

y <- c(5.4, 6.2, 7.1, 4.8, 7.5, 6.2)

names(y) <- c('a', 'b', 'c', 'a', 'e', 'a')

y[c("a", "c")]

a c

5.4 7.1

We’ll solve this problem in the next section.

By position, value, or name using logical vectors

| Code | Meaning |
|---|---|
| x[c(T,T,F)] | Elements at positions 1, 2, 4, 5, etc. |
| x[abs(x - 6.2) < 1e-8] | Elements equal to 6.2 (within a small tolerance) |
| x[x < 3] | All elements less than three |
| x[names(x) == "a"] | All elements with name “a” |
| x[names(x) %in% c("a", "c", "d")] | All elements with names “a”, “c”, or “d” |
| x[!(names(x) %in% c("a","c","d"))] | All elements with names other than “a”, “c”, “d” |
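It can help to look at the logical vector itself before using it as an index. The sketch below uses an illustrative named vector `x`; the threshold 6 and the name "z" are arbitrary.

```r
# `x` is an illustrative named vector
x <- c(5.4, 6.2, 7.1, 4.8, 7.5, 6.2)
names(x) <- c("a", "b", "c", "d", "e", "f")

# the comparison itself produces a logical vector...
x < 6
# ...and the TRUE positions are kept when it is used as an index
x[x < 6]

# a short logical index is recycled: T, T, F, T, T, F
x[c(TRUE, TRUE, FALSE)]

# %in% tests membership for several names at once; "z" matches nothing
x[names(x) %in% c("a", "c", "z")]
```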

Discussion 1

Predict and check the results of the following operations:

x <- c(5.4, 6.2, 7.1, 4.8, 7.5, 6.2)

names(x) <- c('a', 'b', 'c', 'd', 'e', 'f')

x[-(2:4)]

x[-2:4]

-x[2:4]

x[names(x) == "a"]

Discuss with your neighbor subsetting using logical values. How does each of these commands work?

The commands

x[names(x) == "a"]

x[which(names(x) == "a")]

produce the same results. How do these two commands differ from each other?

Tip: Getting help for operators

Remember you can search for help on operators by wrapping them in quotes: help("%in%") or ?"%in%".

Handling special values

At some point you will encounter functions in R which cannot handle missing, infinite, or undefined data.

There are a number of special functions you can use to filter out this data:

- is.na will return all positions in a vector, matrix, or data.frame containing NA.

- likewise, is.nan and is.infinite will do the same for NaN and Inf.

- is.finite will return all positions in a vector, matrix, or data.frame that do not contain NA, NaN or Inf.

- na.omit will filter out all missing values from a vector.
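The sketch below applies each of these functions to one illustrative vector `v` that contains every kind of special value. Note that the functions return logical vectors, which are then used for subsetting.

```r
# `v` is an illustrative vector containing each kind of special value
v <- c(1, NA, 3, NaN, Inf, -Inf)

is.na(v)         # TRUE for NA and also for NaN
is.nan(v)        # TRUE only for NaN
is.infinite(v)   # TRUE for Inf and -Inf
is.finite(v)     # TRUE only for ordinary numbers

v[is.finite(v)]  # 1 3
na.omit(v)       # drops NA and NaN, keeps Inf and -Inf
```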

Subsetting lists: three functions: [, [[, and $.

1. Use [...] to return a subset of a list as a list.

If you want to subset a list, but not

extract an element, then you will likely use [...].

Examples

lst <- list(1:3, "a", c(TRUE, FALSE, TRUE), c(2.3, 5.9))

names(lst) <- c("A","B","C","D")

lst[1]

$A

[1] 1 2 3

str(lst[1])

List of 1

$ A: int [1:3] 1 2 3

2. Use [[...]] to extract an individual element of a list.

You can’t extract more than one element at once nor use [[ ]] to skip elements:

Examples

lst <- list(1:3, "a", c(TRUE, FALSE, TRUE), c(2.3, 5.9))

names(lst) <- c("A","B","C","D")

lst[[1]]

[1] 1 2 3

lst[["D"]]

[1] 2.3 5.9

str(lst[[1]])

int [1:3] 1 2 3

lst[[1:3]]

Error in lst[[1:3]]: recursive indexing failed at level 2

lst[[-1]]

Error in lst[[-1]]: invalid negative subscript in get1index <real>

3. Use $... to extract an element of a list by its name (you don’t need “” for the name):

Example

lst <- list(1:3, "a", c(TRUE, FALSE, TRUE), c(2.3, 5.9))

names(lst) <- c("A","B","C","D")

lst$D # The `$` function is a shorthand way for extracting elements by name

[1] 2.3 5.9

Discussion 2

What is the difference between these two commands? Did you get the results you expected?

lst <- list(1:3, "a", c(TRUE, FALSE, TRUE), c(2.3, 5.9))

names(lst) <- c("A","B","C","D")

# command 1:

lst[1][2]

# command 2:

lst[[1]][2]

Subsetting dataframes

Remember that data frames are lists under the hood, so similar rules apply. However, they are also two-dimensional objects!

Subset columns from a dataset using [...].

The resulting object will be a data frame:

Example

df <- data.frame(

x = 1:3,

y = c("a", "b", "c"),

z = c(TRUE,FALSE,TRUE),

stringsAsFactors = FALSE)

str(df)

'data.frame': 3 obs. of 3 variables:

$ x: int 1 2 3

$ y: chr "a" "b" "c"

$ z: logi TRUE FALSE TRUE

df[1]

x

1 1

2 2

3 3

Subset cells from a dataset using [...] with two numbers:

Example

The first argument corresponds to rows and the second to columns (either or both of them can be skipped!):

df[1:2,2:3]

y z

1 a TRUE

2 b FALSE

If we subset a single row, the result will be a data frame (because the elements are mixed types):

df[3,]

x y z

3 3 c TRUE

But for a single column the result will be a vector (this can be changed with the third argument, drop = FALSE).

df[,3]

[1] TRUE FALSE TRUE
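A small sketch of the drop argument, rebuilding the same example data frame so the block runs on its own. The names `col_vec` and `col_df` are invented for this illustration.

```r
# the same example data frame as above, rebuilt so this block is self-contained
df <- data.frame(
  x = 1:3,
  y = c("a", "b", "c"),
  z = c(TRUE, FALSE, TRUE),
  stringsAsFactors = FALSE
)

col_vec <- df[, 3]                 # simplified to a logical vector
col_df  <- df[, 3, drop = FALSE]   # stays a one-column data frame

class(col_vec)   # "logical"
class(col_df)    # "data.frame"
dim(col_df)      # 3 1
```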

Extract a single column using [[...]] or $... with the column name:

df$x

[1] 1 2 3

#or

df[["x"]]

[1] 1 2 3

Discussion 3

What is the difference between:

df[,2]

df[2]

df[[2]]

How about

df[3,2]

#and

df[[2]][3]

Key Points

Access individual values by location using [].

Access slices of data using [low:high].

Access arbitrary sets of data using [c(...)].

Use which to select subsets of data based on value.

Data Transformation

Overview

Teaching: 60 min

Exercises: 30 min

Questions

How to use dplyr to transform data in R?

How do I select columns?

How can I filter rows?

How can I join two data frames?

Objectives

Be able to explore a dataframe

Be able to add, rename, and remove columns.

Be able to filter rows

Be able to append two data frames

Be able to join two data frames

Data transformation

Introduction

Every data analysis you conduct will likely involve manipulating dataframes at

some point. Quickly extracting, transforming, and summarizing information from

dataframes is an essential R skill. In this part we’ll use Hadley Wickham’s

dplyr package, which consolidates and simplifies many of the common operations

we perform on dataframes. In addition, much of dplyr’s key functionality is

written in C++ for speed.

Preliminaries

Install (if needed) and/or load two libraries, tidyverse and tidyr:

Dataset

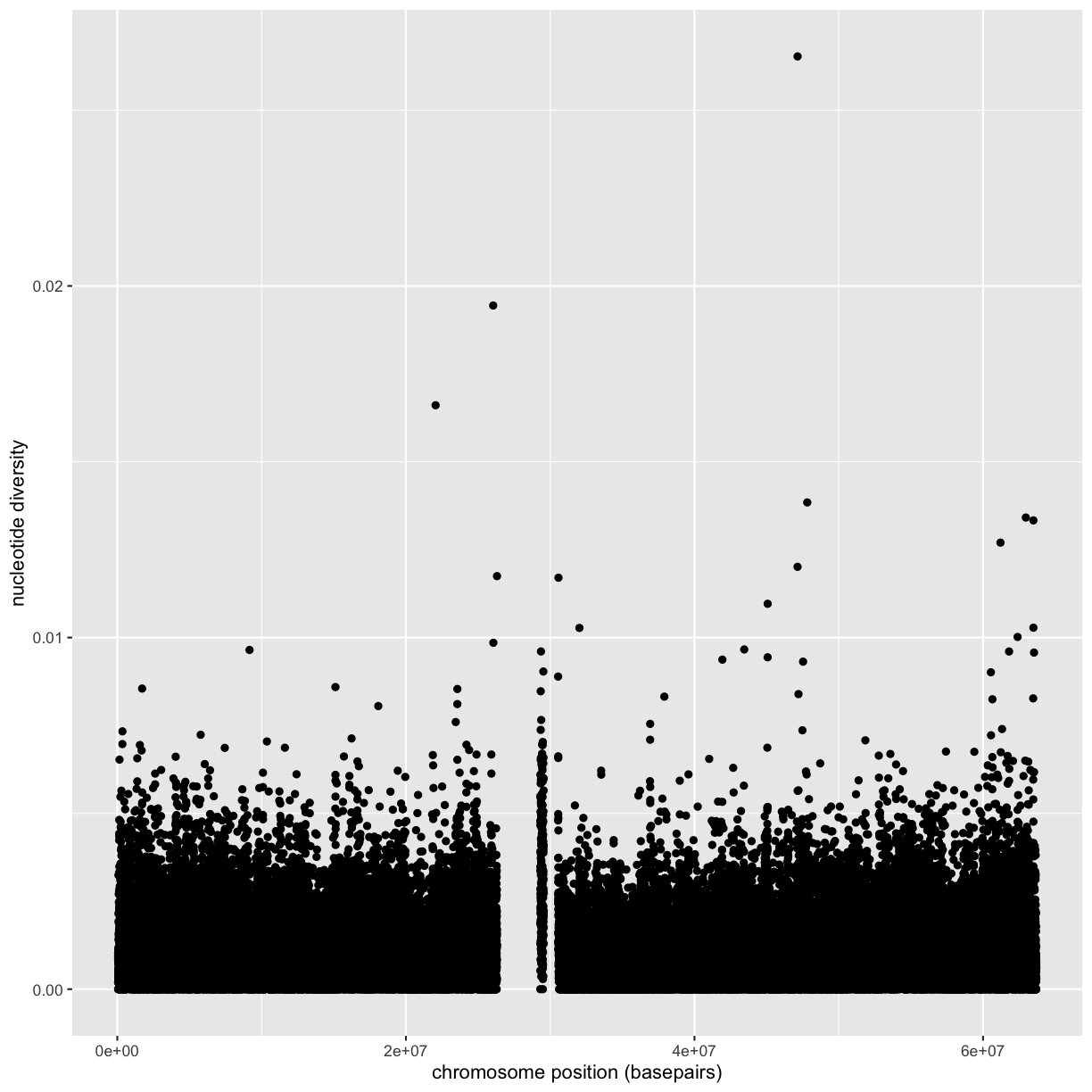

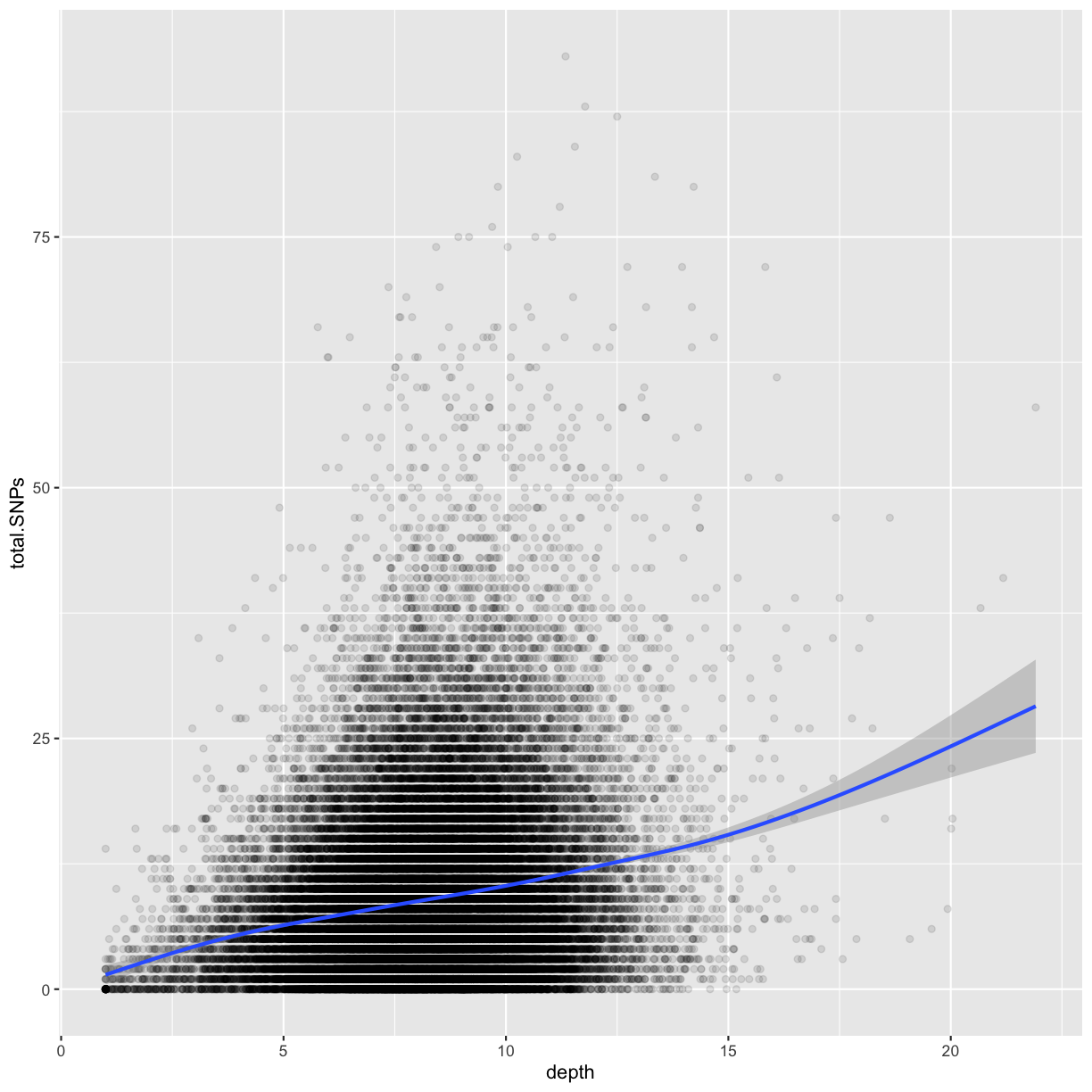

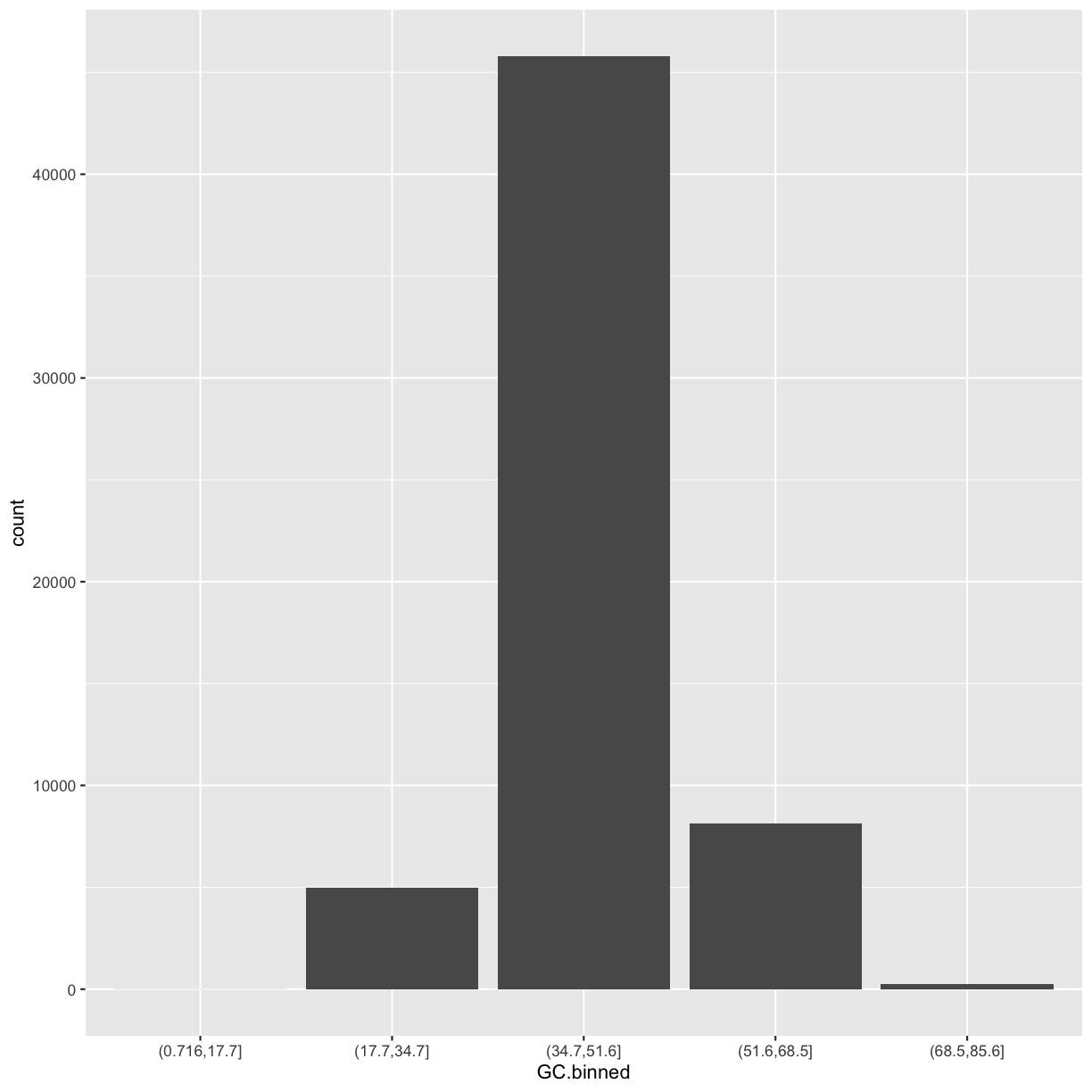

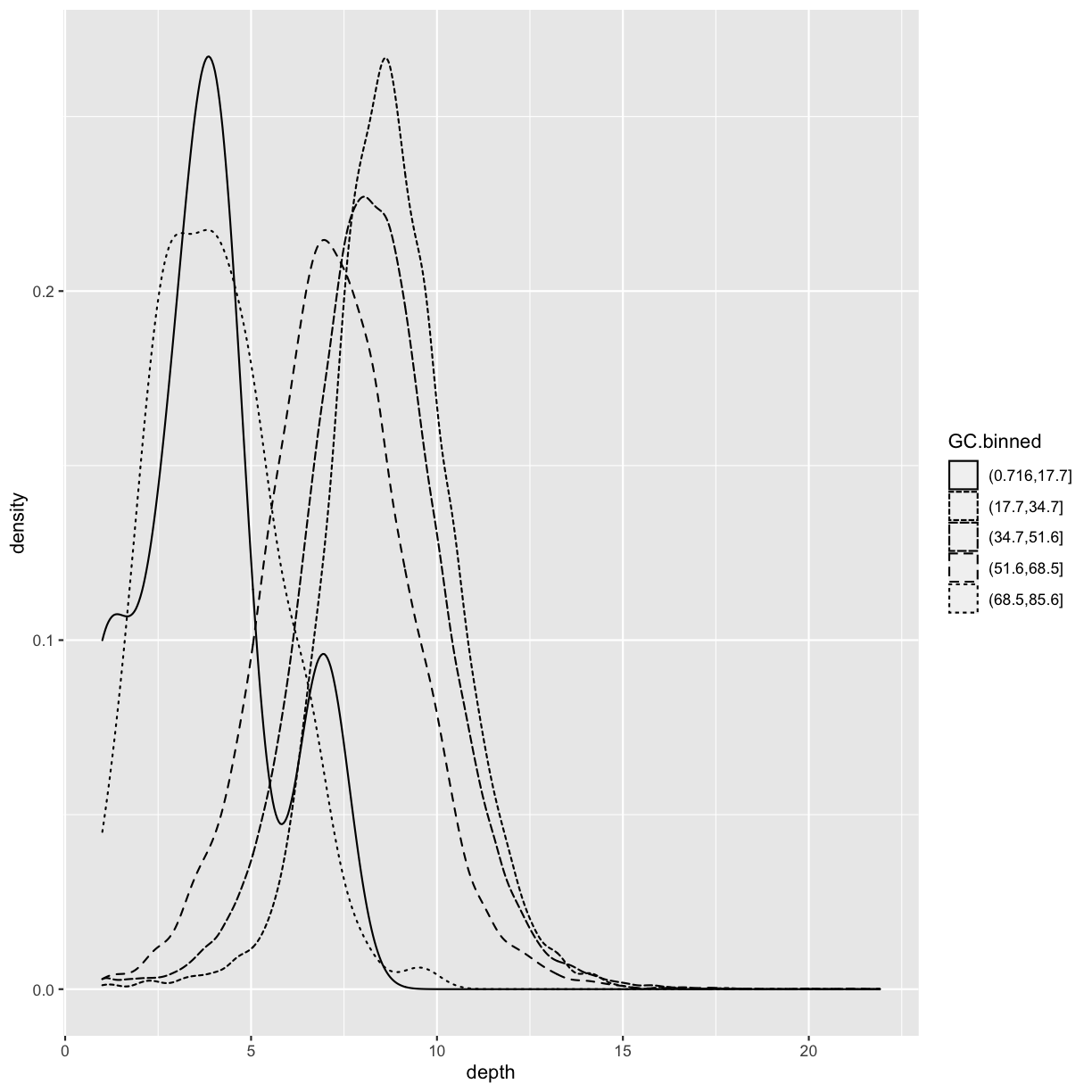

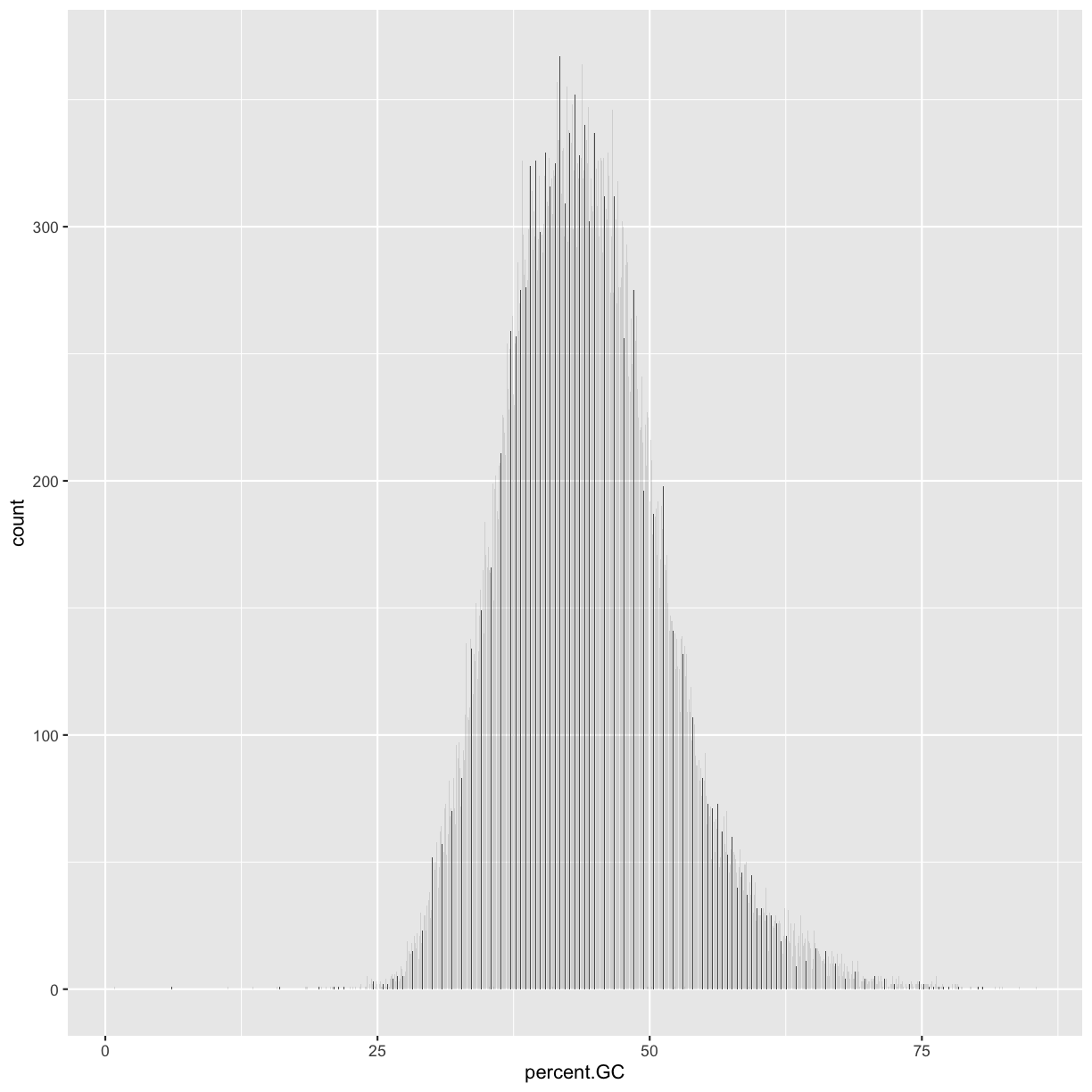

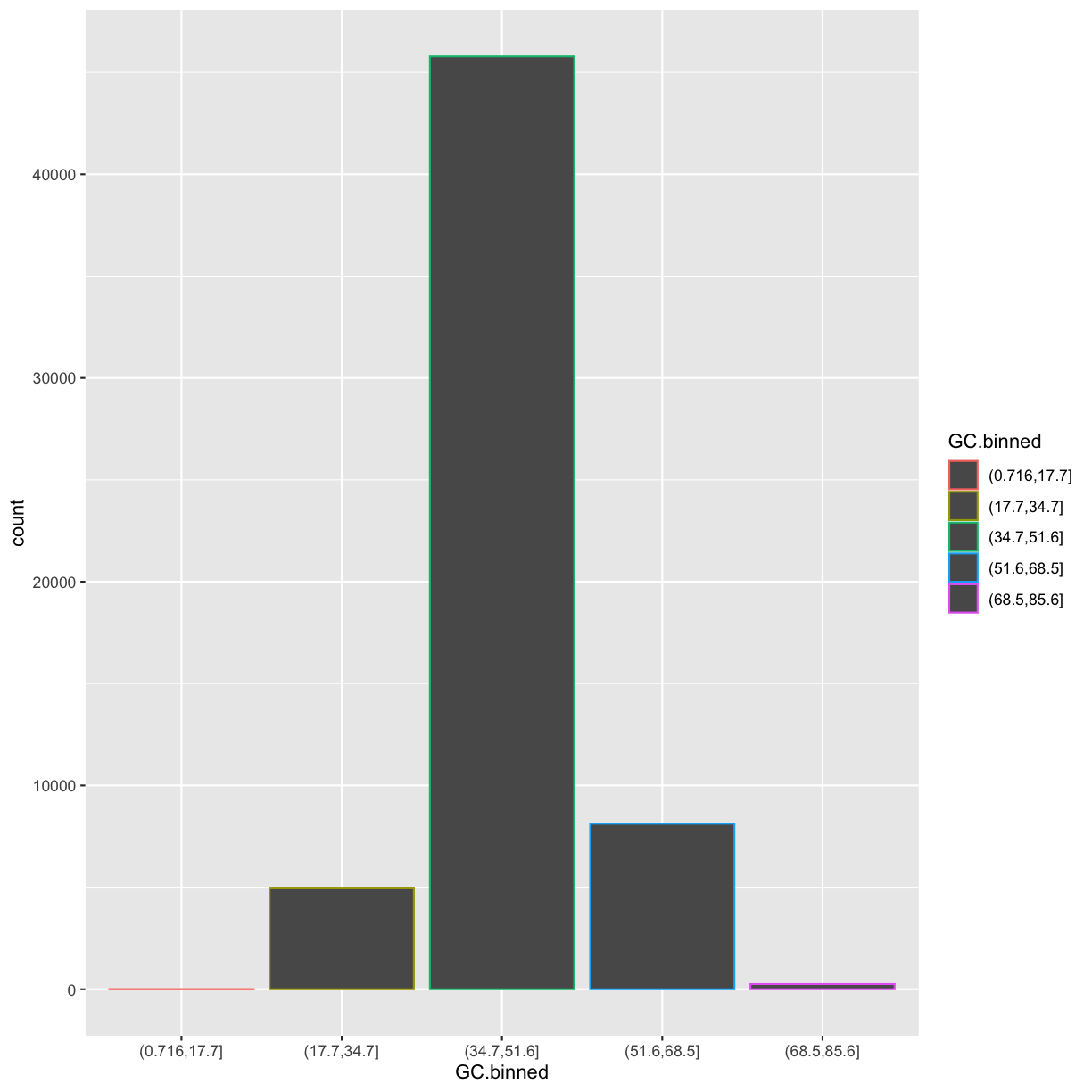

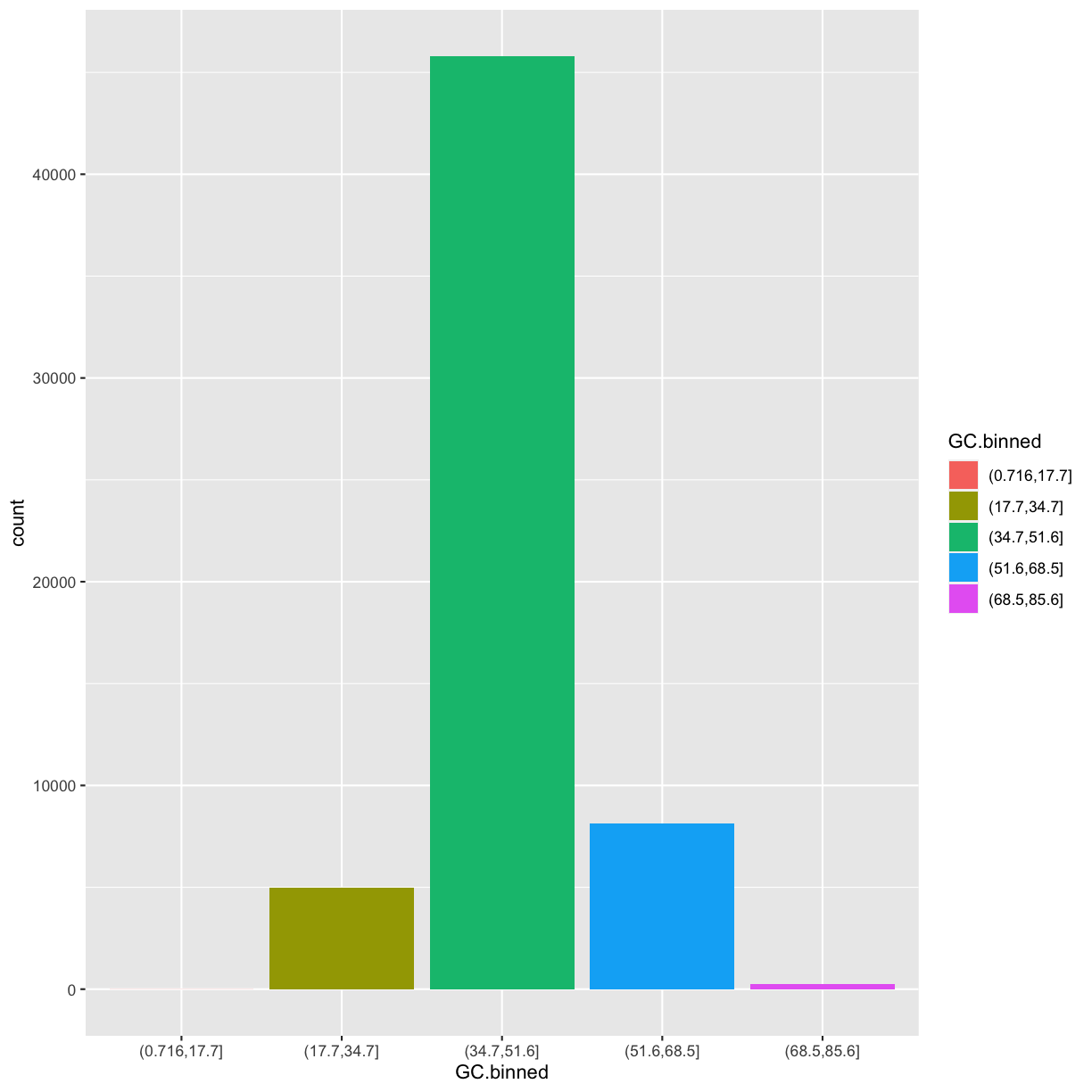

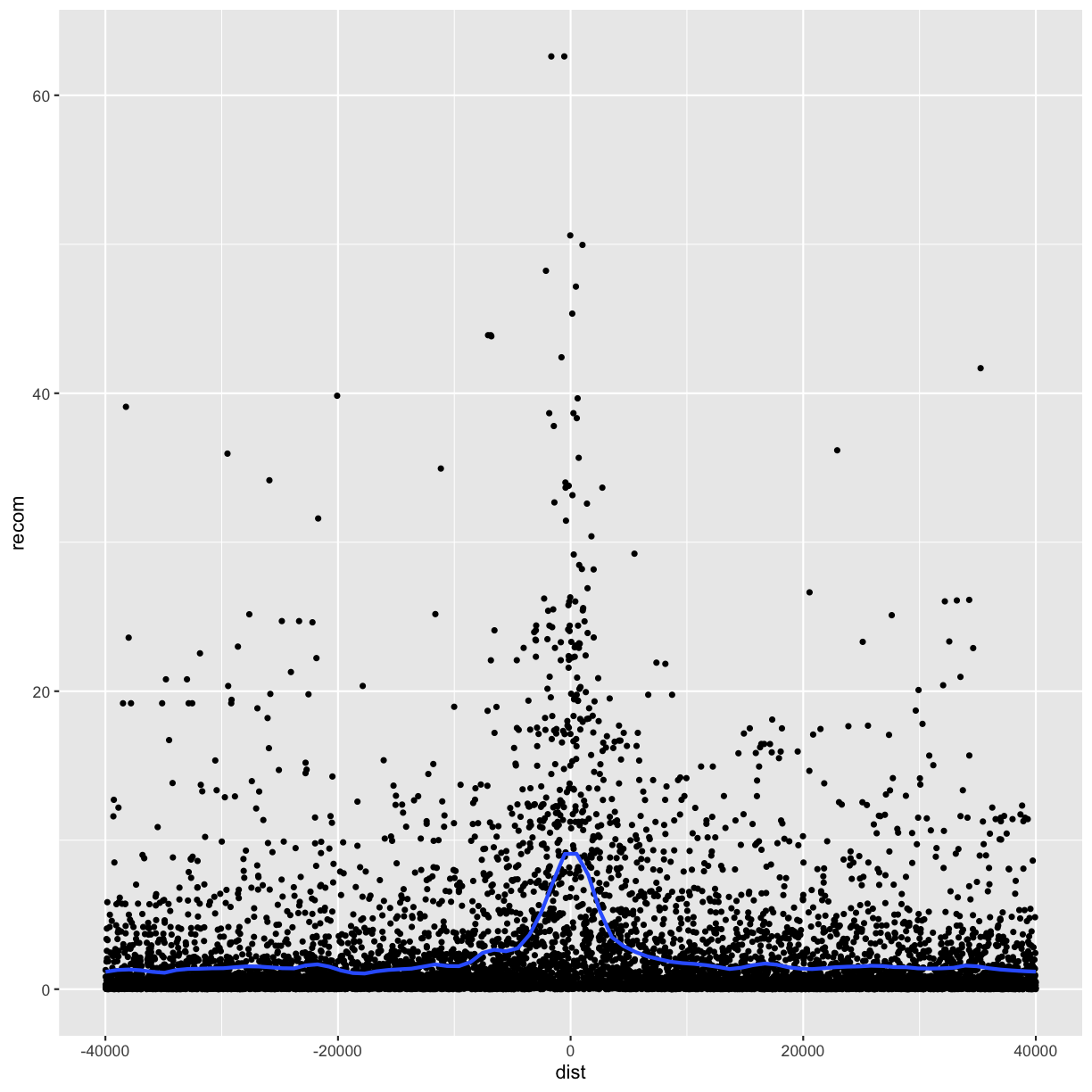

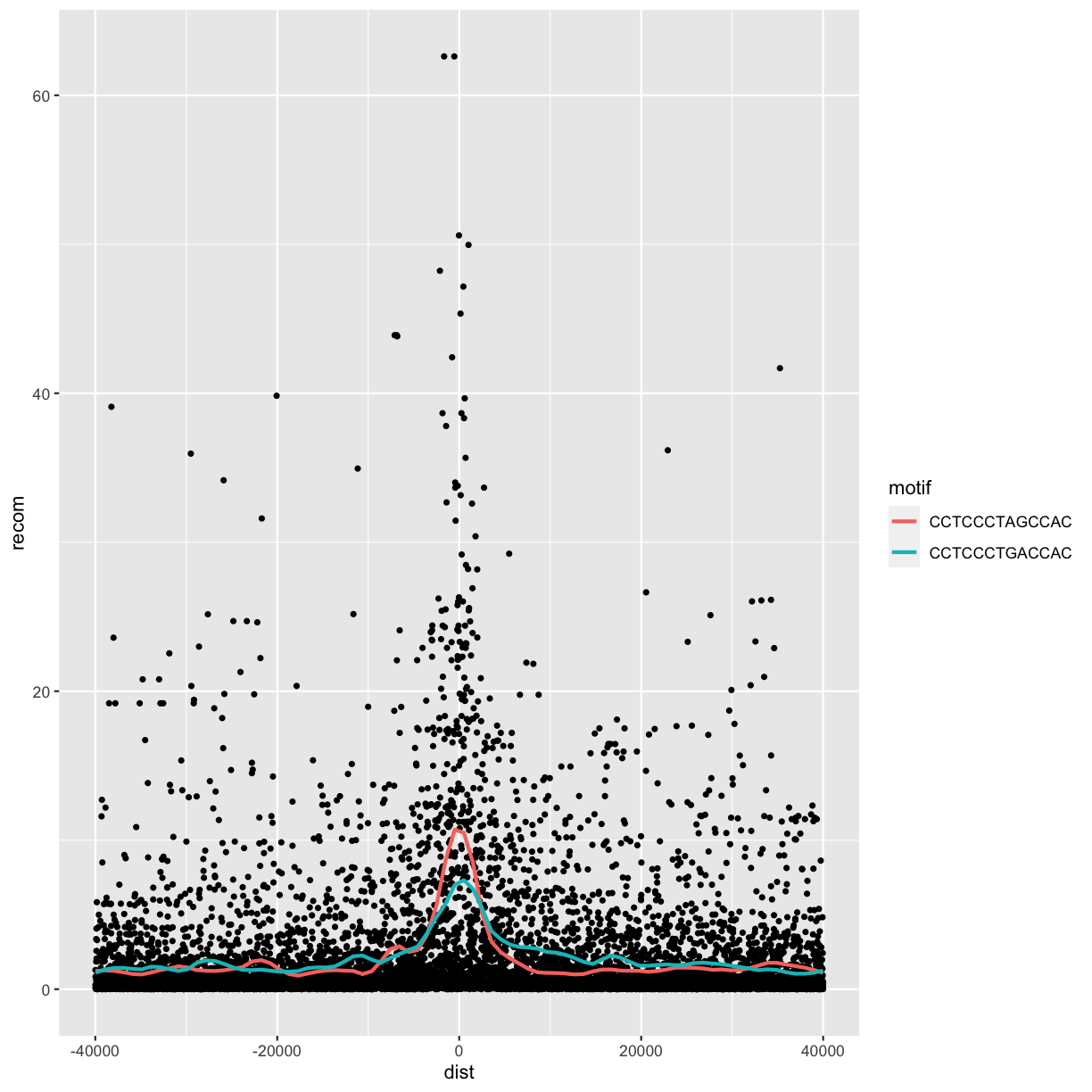

Following chapter 8 of Buffalo’s book, we will use the dataset Dataset_S1.txt from the paper “The Influence of Recombination on Human Genetic Diversity”. The dataset contains estimates of population genetics statistics such as nucleotide diversity (e.g., the columns Pi and Theta), recombination (column Recombination), and sequence divergence as estimated by percent identity between human and chimpanzee genomes (column Divergence). Other columns contain information about the sequencing depth (depth) and GC content (%GC, which we later rename to percent.GC) for 1kb windows in human chromosome 20. We’ll only work with a few columns in our examples; see the description of Dataset_S1.txt in the original paper for more details.

Download the dataset

We can download the Dataset_S1.txt file into a local folder of your choice using

the download.file function. Instead, we will read the file directly from the

Internet with the read_csv function:

dvst <- read_csv("https://raw.githubusercontent.com/vsbuffalo/bds-files/master/chapter-08-r/Dataset_S1.txt")

── Column specification ─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

cols(

start = col_double(),

end = col_double(),

`total SNPs` = col_double(),

`total Bases` = col_double(),

depth = col_double(),

`unique SNPs` = col_double(),

dhSNPs = col_double(),

`reference Bases` = col_double(),

Theta = col_double(),

Pi = col_double(),

Heterozygosity = col_double(),

`%GC` = col_double(),

Recombination = col_double(),

Divergence = col_double(),

Constraint = col_double(),

SNPs = col_double()

)

Notice the parsing of columns in the file. Also notice that some of the columns names contain spaces and special characters. We’ll replace them soon to facilitate typing.

We can check the data structure created with either the str(dvst) command or by

typing dvst:

dvst

# A tibble: 59,140 x 16

start end `total SNPs` `total Bases` depth `unique SNPs` dhSNPs

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 55001 56000 0 1894 3.41 0 0

2 56001 57000 5 6683 6.68 2 2

3 57001 58000 1 9063 9.06 1 0

4 58001 59000 7 10256 10.3 3 2

5 59001 60000 4 8057 8.06 4 0

6 60001 61000 6 7051 7.05 2 1

7 61001 62000 7 6950 6.95 2 1

8 62001 63000 1 8834 8.83 1 0

9 63001 64000 1 9629 9.63 1 0

10 64001 65000 3 7999 8 1 1

# … with 59,130 more rows, and 9 more variables: reference Bases <dbl>,

# Theta <dbl>, Pi <dbl>, Heterozygosity <dbl>, %GC <dbl>,

# Recombination <dbl>, Divergence <dbl>, Constraint <dbl>, SNPs <dbl>

It’s a tibble! Notice that the tibble only shows the first few rows and all the columns that fit on one screen.

Run View(dvst), which will open the dataset in the RStudio viewer, to see the

whole dataframe.

Also notice that there are some additional variable types available:

| Abbreviation | Variable type |
|---|---|
| int | integers |
| dbl | doubles (real numbers) |
| fctr | factors (categorical) |
| Variables | not in the dataset |
| chr | character vectors (strings) |
| dttm | date-time |
| lgl | logical |
| date | dates |

dplyr basics

There are five key dplyr functions that allow you to solve the vast majority of data manipulation challenges:

Key dplyr functions

| Function | Action |
|---|---|
| filter() | Pick observations by their values |
| arrange() | Reorder the rows |
| select() | Pick variables by their names |
| mutate() | Create new variables with functions of existing variables |
| summarise() | Collapse many values down to a single summary |

None of these functions perform tasks you can’t accomplish with R’s base functions. But dplyr’s advantage is in the added consistency, speed, and versatility of its data manipulation interface. Get this cheatsheet to keep as a reminder of these and several additional functions.

group_by function

dplyr functions can be used in conjunction with group_by(), which changes

the scope of each function from operating on the entire dataset to operating on

it group-by-group.

Overall command structure

All dplyr functions work similarly:

- The first argument is a data frame (actually, a tibble).

- The subsequent arguments describe what to do with the data frame, using the variable names (without quotes).

- The result is a new data frame.

Together these properties make it easy to chain together multiple simple steps to achieve a complex result.

These six functions provide the verbs for a language of data manipulation. Let’s dive in and see how these verbs work.

Use filter() to filter rows

Let’s say we used summary to get an idea about the total number of SNPs in

different windows:

summary(select(dvst,`total SNPs`)) # Here we also used `select` function. We'll talk about it soon.

total SNPs

Min. : 0.000

1st Qu.: 3.000

Median : 7.000

Mean : 8.906

3rd Qu.:12.000

Max. :93.000

The number varies considerably across all windows on chromosome 20 and the data are right-skewed: the third quartile is 12 SNPs, but the maximum is 93 SNPs. Often we want to investigate such outliers more closely.

We can use filter() to extract the rows of our dataframe based on their values.

The first argument is the name of the data frame. The second and subsequent

arguments are the expressions that filter the data frame.

filter(dvst,`total SNPs` >= 85)

# A tibble: 3 x 16

start end `total SNPs` `total Bases` depth `unique SNPs` dhSNPs

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 2621001 2622000 93 11337 11.3 13 10

2 13023001 13024000 88 11784 11.8 11 1

3 47356001 47357000 87 12505 12.5 9 7

# … with 9 more variables: reference Bases <dbl>, Theta <dbl>, Pi <dbl>,

# Heterozygosity <dbl>, %GC <dbl>, Recombination <dbl>, Divergence <dbl>,

# Constraint <dbl>, SNPs <dbl>

Questions:

- How many windows have >= 85 SNPs?

- What is a SNP?

We can build more elaborate queries by using multiple filters:

filter(dvst, Pi > 16, `%GC` > 80)

# A tibble: 3 x 16

start end `total SNPs` `total Bases` depth `unique SNPs` dhSNPs

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 63097001 63098000 5 947 2.39 2 1

2 63188001 63189000 2 1623 3.21 2 0

3 63189001 63190000 5 1395 1.89 3 2

# … with 9 more variables: reference Bases <dbl>, Theta <dbl>, Pi <dbl>,

# Heterozygosity <dbl>, %GC <dbl>, Recombination <dbl>, Divergence <dbl>,

# Constraint <dbl>, SNPs <dbl>

Note, dplyr functions never modify their inputs, so if you want to save the

result, you’ll need to use the assignment operator, <-:

new_df <- filter(dvst, Pi > 16, `%GC` > 80)

Tip

R either prints out the results, or saves them to a variable. If you want to do both, you can wrap the assignment in parentheses:

(new_df <- filter(dvst, Pi > 16, `%GC` > 80))

Comparisons

Use comparison operators to filter effectively:

>, >=, <, <=, != (not equal), and == (equal).

Remember that == does not work well with real numbers! Use the dplyr near()

function instead!
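The problem with == on real numbers can be seen in base R alone. In the sketch below, `near_sketch` is a hypothetical helper written for this illustration, not a dplyr function; it only mimics the idea behind near(), which is an element-wise comparison within a small tolerance.

```r
# floating-point arithmetic is only approximate
sqrt(2)^2 == 2                    # FALSE
1 / 49 * 49 == 1                  # FALSE

# base R alternative: all.equal() compares within a tolerance
isTRUE(all.equal(sqrt(2)^2, 2))   # TRUE

# `near_sketch` is a hypothetical helper, not part of dplyr; it mimics the
# idea behind dplyr::near(): element-wise comparison within a tolerance
near_sketch <- function(x, y, tol = .Machine$double.eps^0.5) {
  abs(x - y) < tol
}
near_sketch(sqrt(2)^2, 2)                 # TRUE
near_sketch(c(0.1 + 0.2, 1), c(0.3, 2))   # TRUE FALSE
```

Inside filter(), the same pattern would read filter(df, near(column, value)), where df and column stand for your own data.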

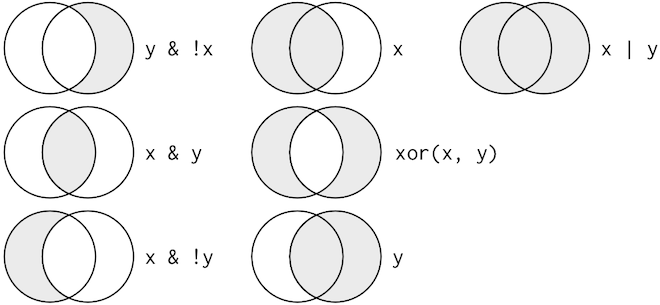

Logical operators

Multiple arguments to filter() are combined with “and”: every expression must

be true in order for a row to be included in the output. For other types of

combinations, you’ll need to use Boolean operators

yourself: & is “and”, | is “or”, and ! is “not”. The figure below shows

the complete set of Boolean operations.
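The Boolean operators work element by element on logical vectors, which is what filter() relies on. A minimal base R sketch, using two made-up vectors `snps` and `gc` that stand in for two columns of a data frame:

```r
# `snps` and `gc` are made-up values standing in for two columns
snps <- c(0, 5, 12, 93, 7)
gc   <- c(40, 85, 55, 82, 30)

snps > 6 & gc > 50      # "and": both conditions hold
snps > 6 | gc > 50      # "or": at least one condition holds
!(snps > 6)             # "not": flips TRUE and FALSE

# De Morgan's law: !(a & b) is the same as !a | !b
identical(!(snps > 6 & gc > 50), !(snps > 6) | !(gc > 50))   # TRUE
```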

x %in% y operator

If you are looking for multiple values within a certain column, use the x %in% y

operator. This will select every row where x is one of the values in y:

filter(dvst, `total SNPs` %in% c(0,1,2))

# A tibble: 12,446 x 16

start end `total SNPs` `total Bases` depth `unique SNPs` dhSNPs

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 55001 56000 0 1894 3.41 0 0

2 57001 58000 1 9063 9.06 1 0

3 62001 63000 1 8834 8.83 1 0

4 63001 64000 1 9629 9.63 1 0

5 65001 66000 0 8376 8.38 0 0

6 66001 67000 2 8431 8.43 2 0

7 68001 69000 1 5381 5.38 1 0

8 72001 73000 1 5276 5.28 1 0

9 73001 74000 1 6648 6.65 1 0

10 80001 81000 1 6230 6.23 1 0

# … with 12,436 more rows, and 9 more variables: reference Bases <dbl>,

# Theta <dbl>, Pi <dbl>, Heterozygosity <dbl>, %GC <dbl>,

# Recombination <dbl>, Divergence <dbl>, Constraint <dbl>, SNPs <dbl>

Finally, whenever you start using complicated, multipart expressions in filter(),

consider making them explicit variables instead. That makes it much easier to

check your work. We’ll learn how to create new variables shortly.

Missing values

One important feature of R that can make comparison tricky is missing values, or NAs (“not availables”). NA represents an unknown value, so missing values are “contagious”: almost any operation involving an unknown value will also be unknown.

Examples:

NA > 5

[1] NA

10 == NA

[1] NA

NA + 10

[1] NA

NA / 2

[1] NA

NA == NA

[1] NA

filter() only includes rows where the condition is TRUE; it excludes both

FALSE and NA values. If you want

to preserve missing values, ask for them explicitly:

filter(dvst, is.na(Divergence))
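To keep rows with missing values alongside rows that pass a condition, combine is.na() with the condition using |. The sketch below assumes dplyr is installed and uses a tiny made-up tibble `toy` rather than the lesson dataset.

```r
library(dplyr)

# `toy` is a made-up tibble, not the lesson dataset
toy <- tibble(window = 1:4, Divergence = c(0.01, NA, 0.03, NA))

# rows where the condition evaluates to NA are dropped: 1 row remains
filter(toy, Divergence > 0.02)

# asking for the NA rows explicitly keeps them: 3 rows remain
filter(toy, is.na(Divergence) | Divergence > 0.02)
```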

Use mutate() to add new variables

It’s often useful to add new columns that are functions of existing columns.

That’s the job of mutate(). mutate() always adds new columns at the end of

your dataset. Remember that when you’re in RStudio, the easiest way to see all

the columns is with View().

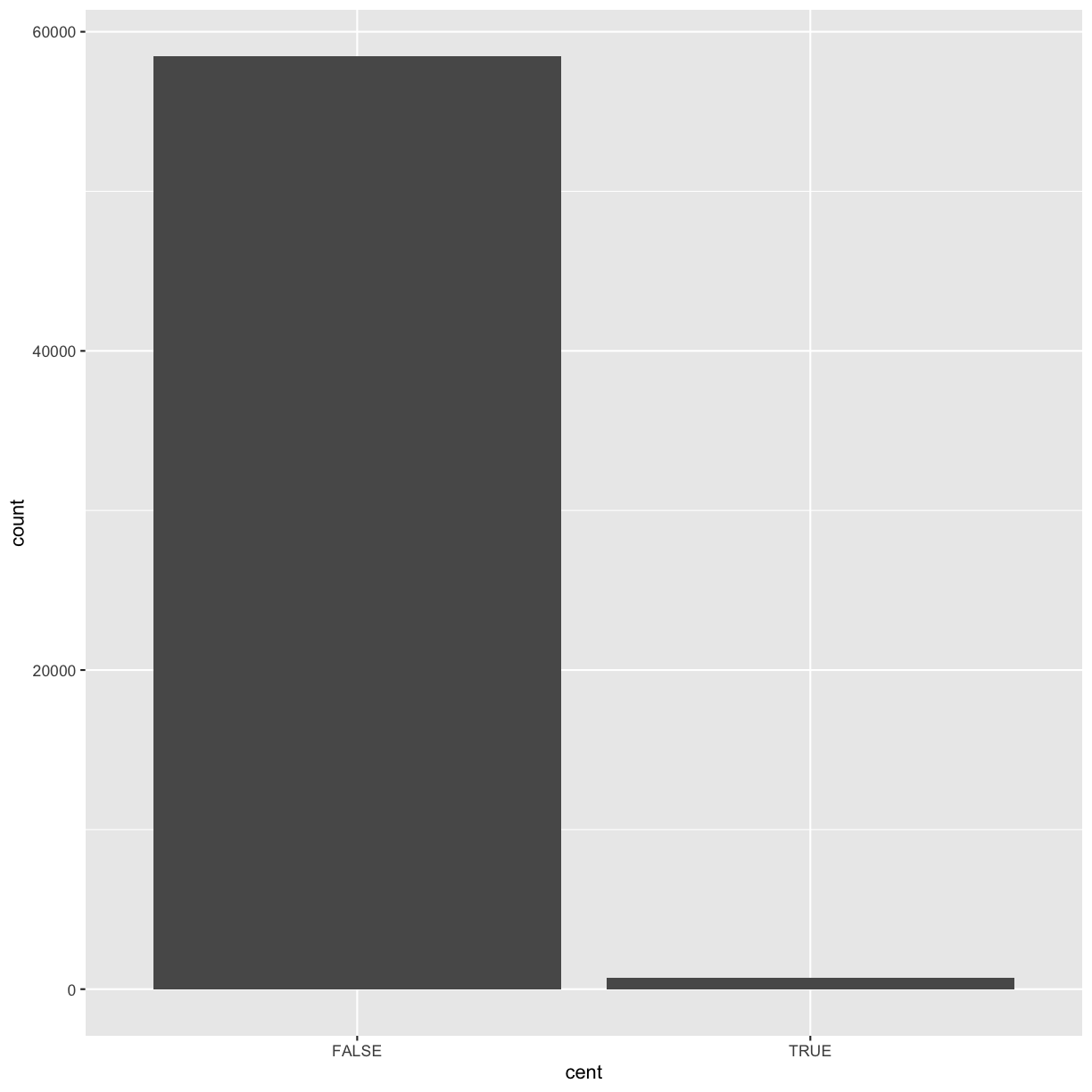

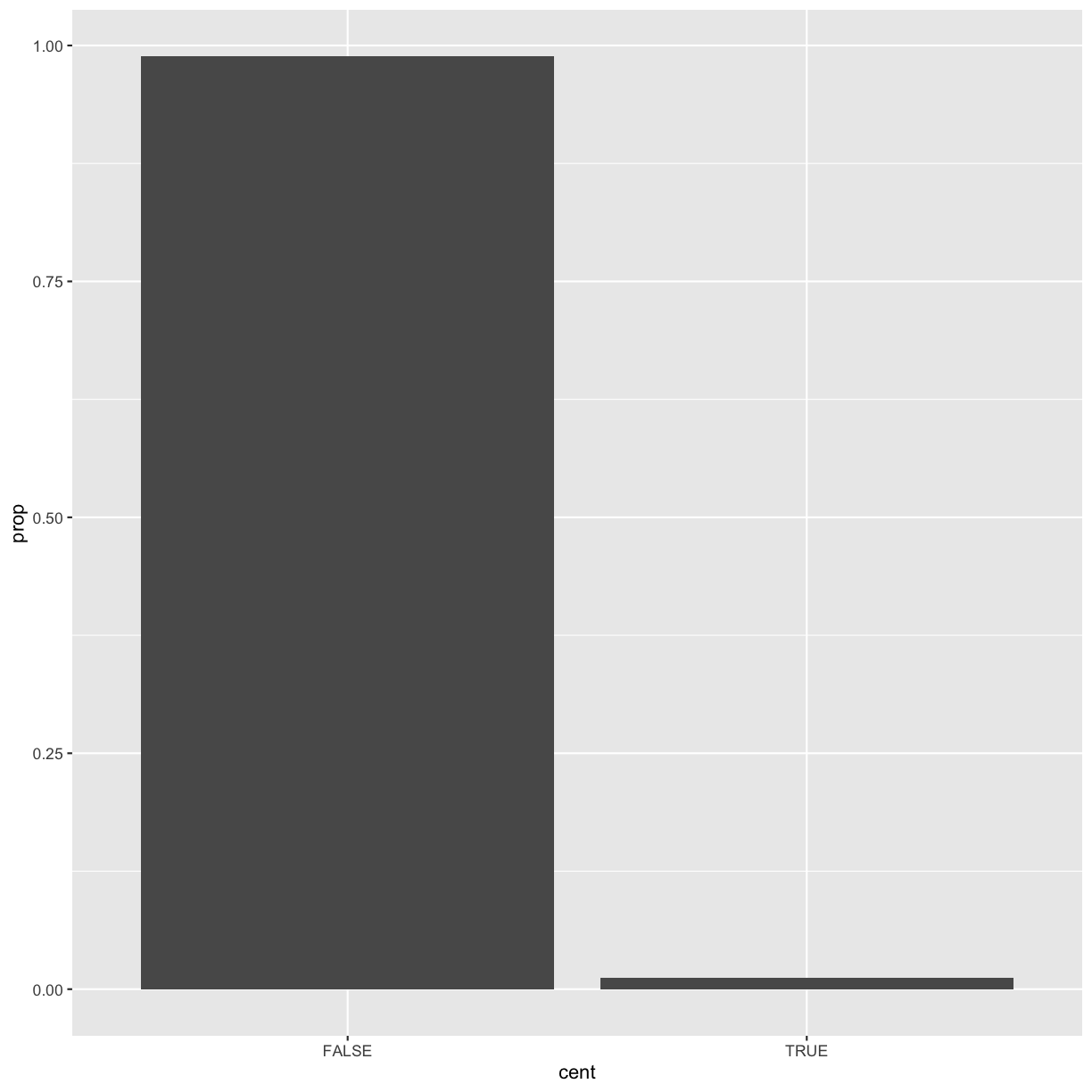

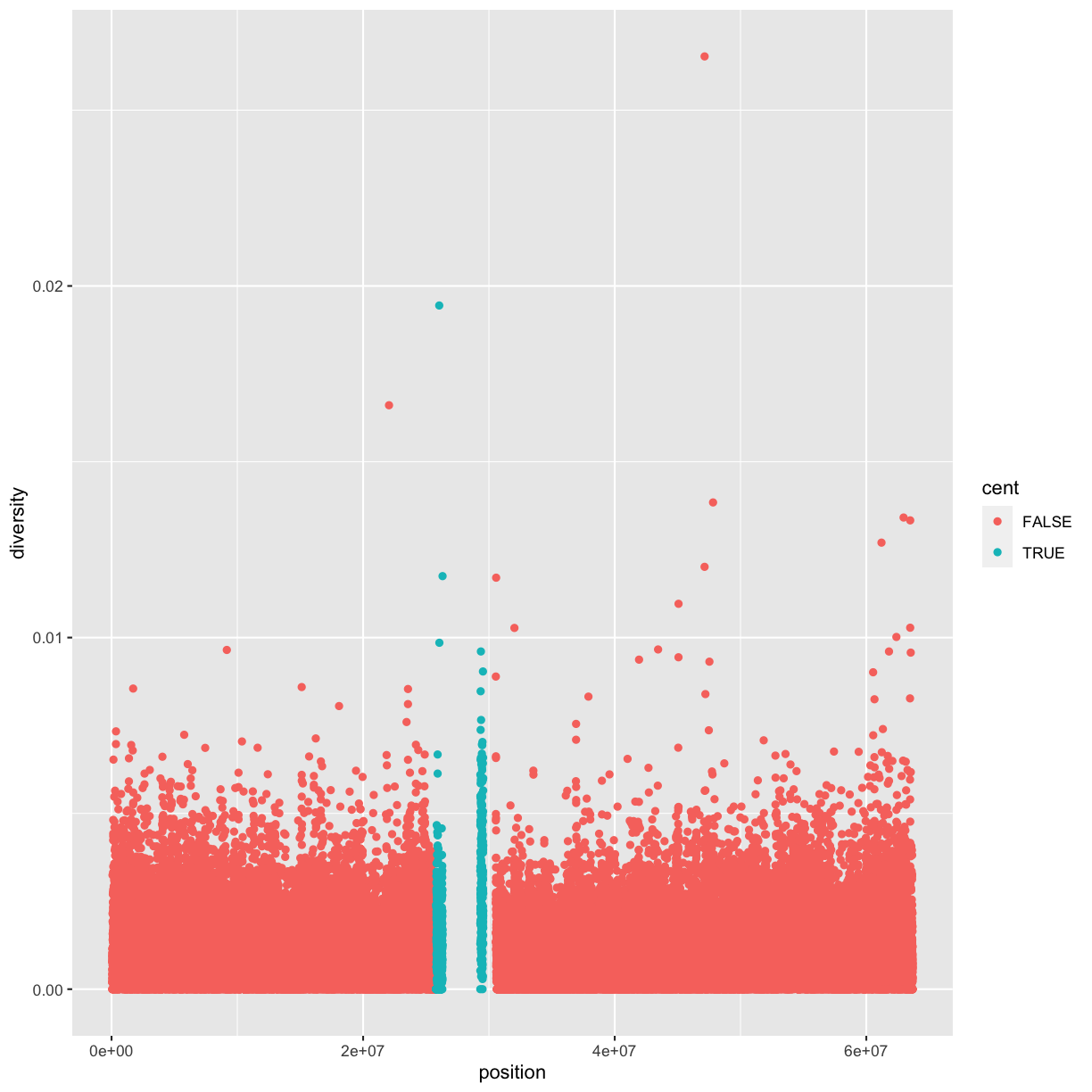

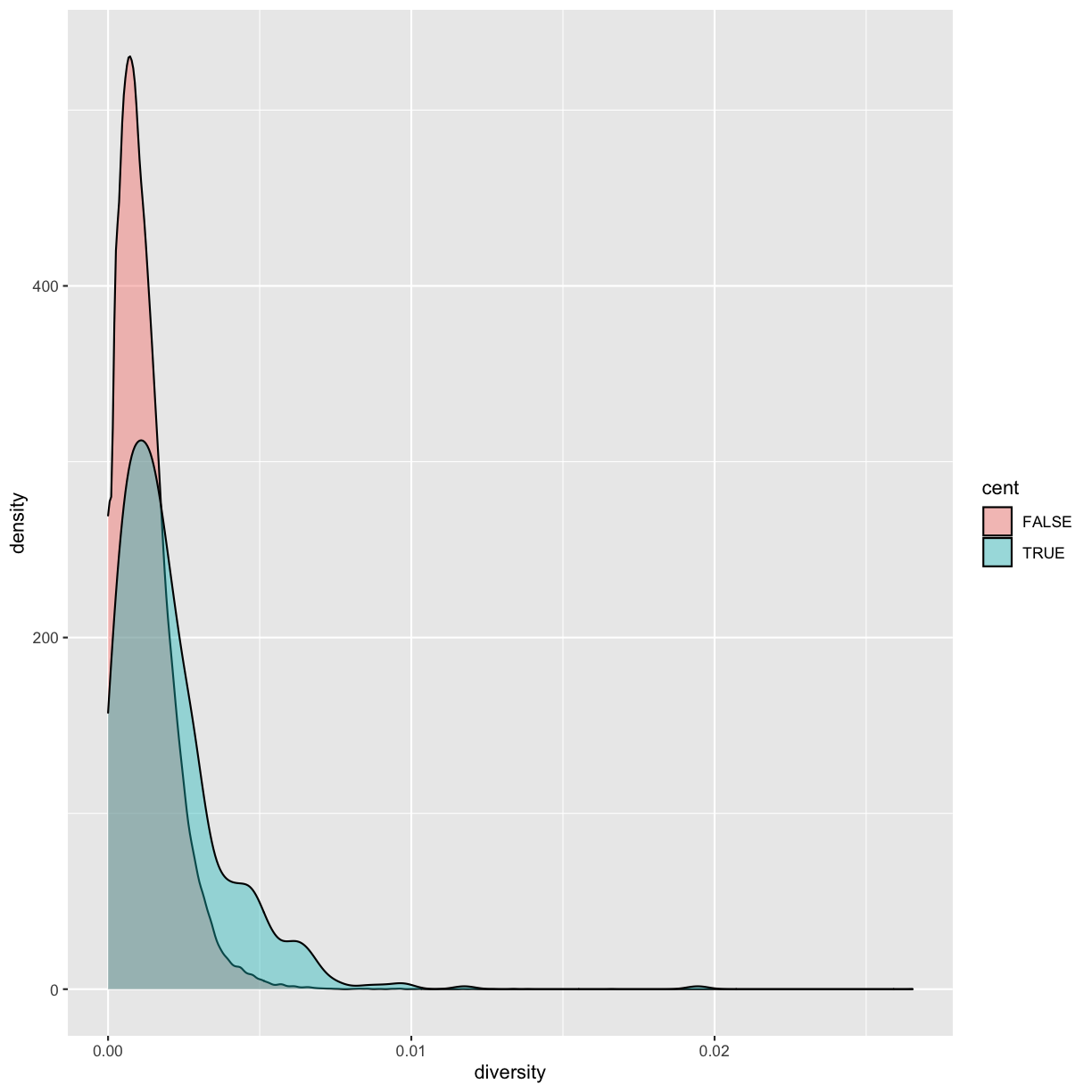

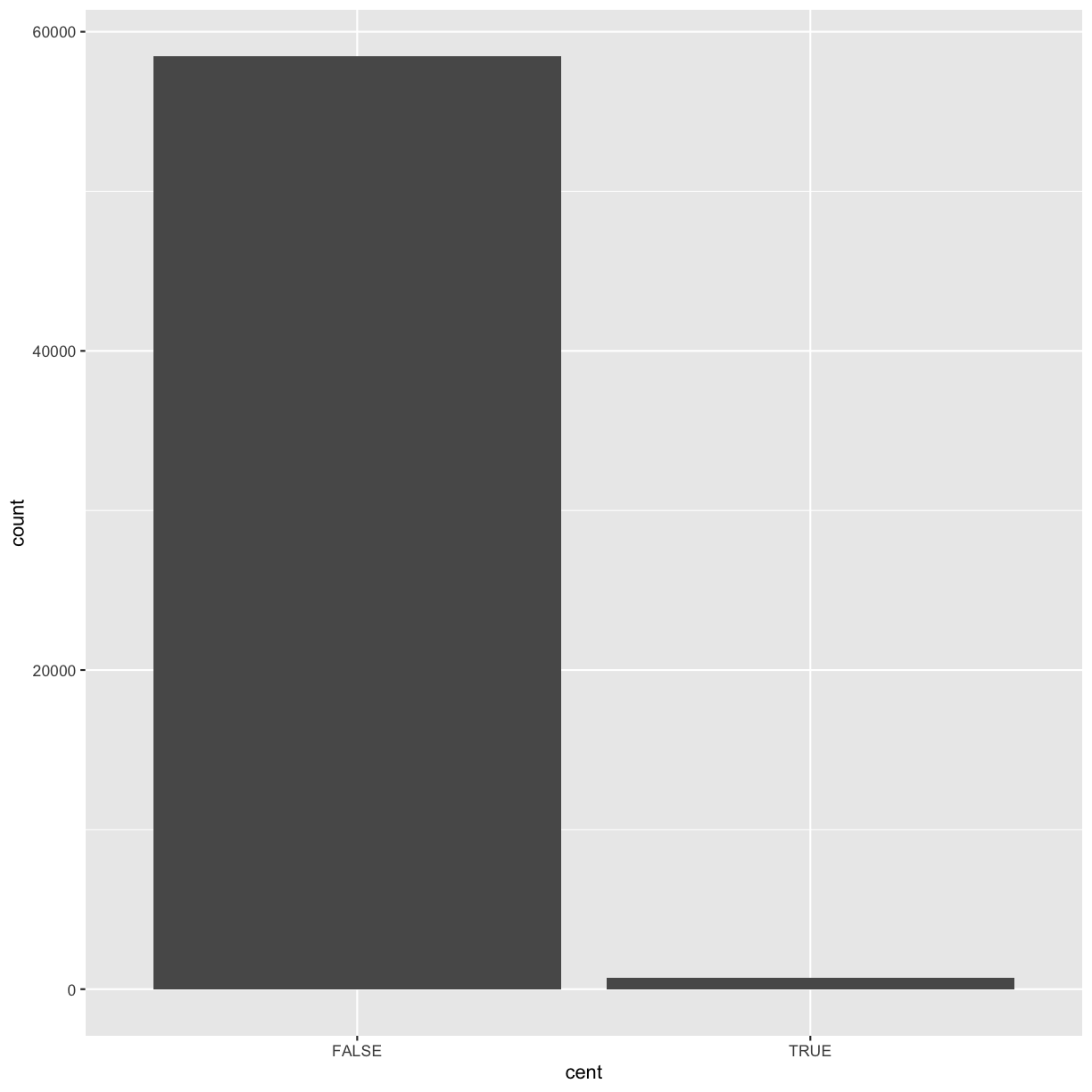

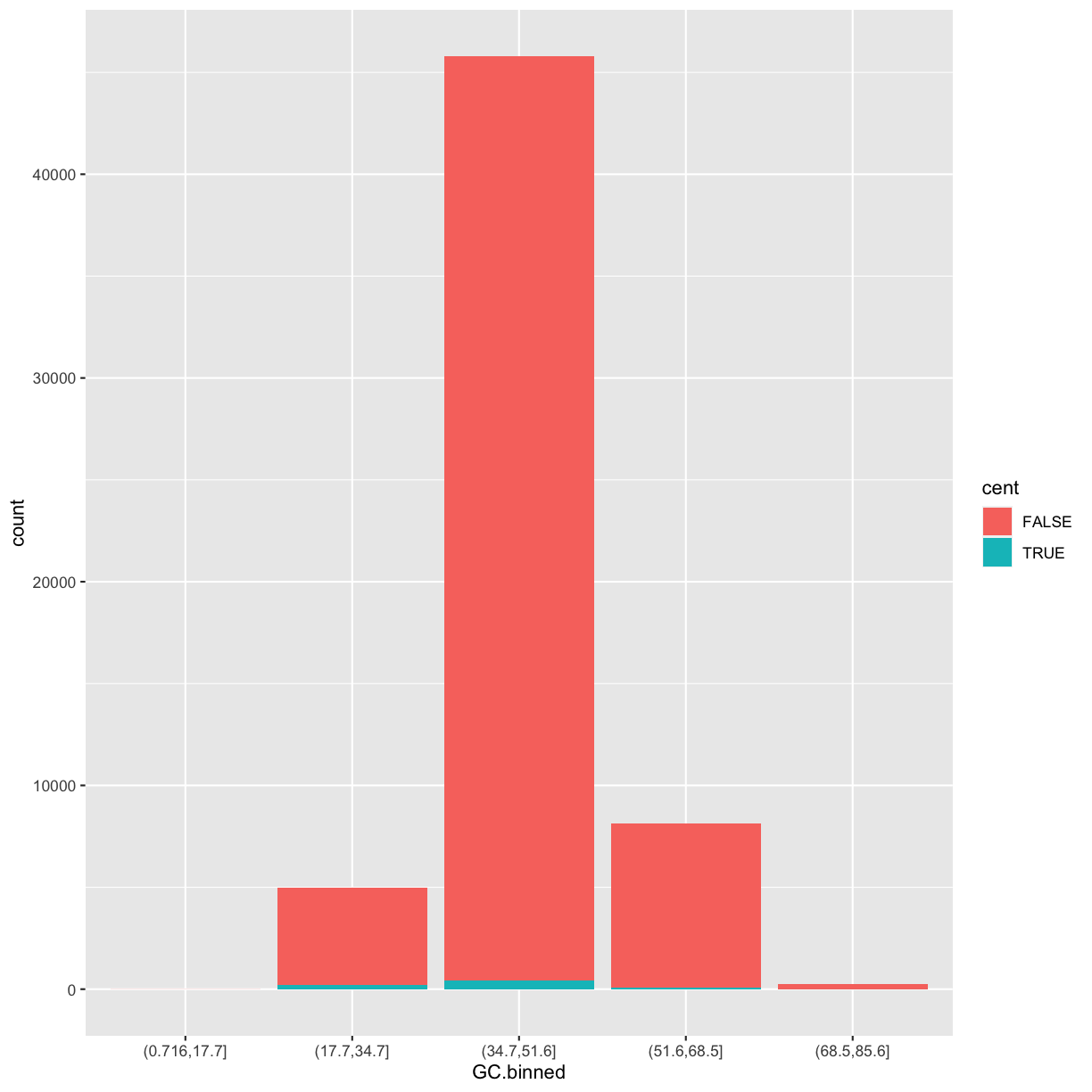

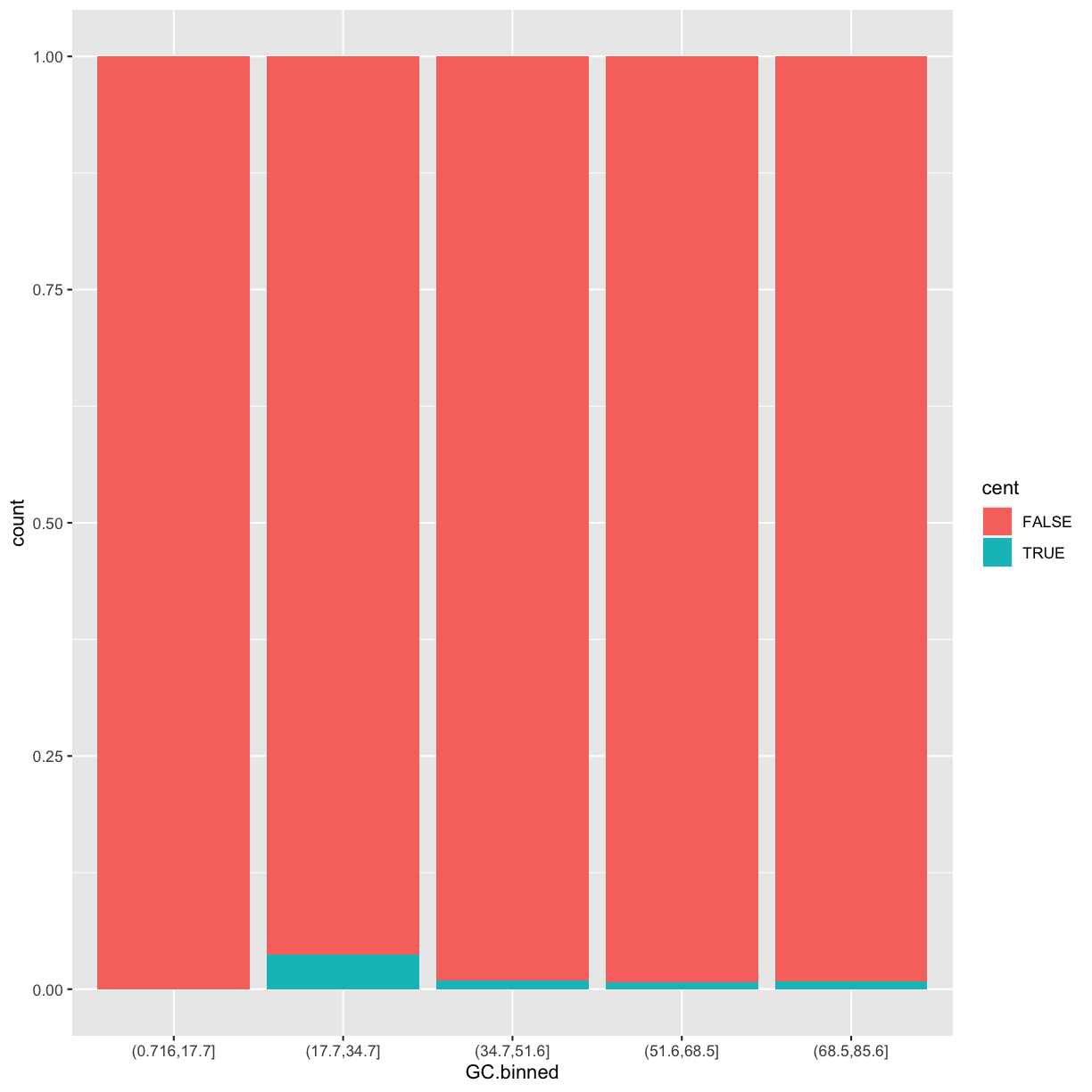

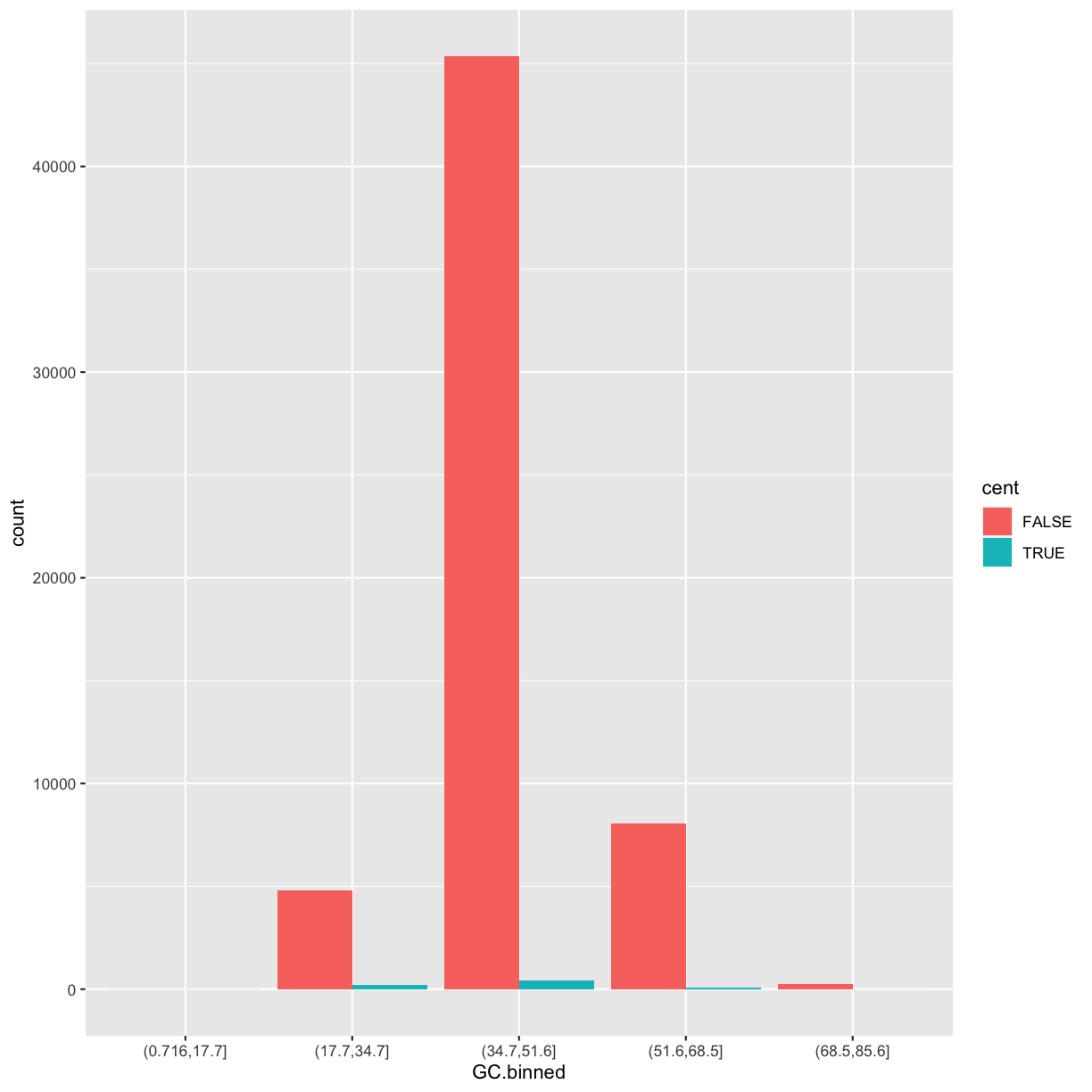

Here we’ll add an additional column that indicates whether a window is in the centromere region (nucleotides 25,800,000 to 29,700,000, based on Giemsa banding; see README for details).

We’ll call it cent and make it logical (with TRUE/FALSE values):

mutate(dvst, cent = start >= 25800000 & end <= 29700000)

# A tibble: 59,140 x 17

start end `total SNPs` `total Bases` depth `unique SNPs` dhSNPs

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 55001 56000 0 1894 3.41 0 0

2 56001 57000 5 6683 6.68 2 2

3 57001 58000 1 9063 9.06 1 0

4 58001 59000 7 10256 10.3 3 2

5 59001 60000 4 8057 8.06 4 0

6 60001 61000 6 7051 7.05 2 1

7 61001 62000 7 6950 6.95 2 1

8 62001 63000 1 8834 8.83 1 0

9 63001 64000 1 9629 9.63 1 0

10 64001 65000 3 7999 8 1 1

# … with 59,130 more rows, and 10 more variables: reference Bases <dbl>,

# Theta <dbl>, Pi <dbl>, Heterozygosity <dbl>, %GC <dbl>,

# Recombination <dbl>, Divergence <dbl>, Constraint <dbl>, SNPs <dbl>,

# cent <lgl>

dvst

# A tibble: 59,140 x 16

start end `total SNPs` `total Bases` depth `unique SNPs` dhSNPs

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 55001 56000 0 1894 3.41 0 0

2 56001 57000 5 6683 6.68 2 2

3 57001 58000 1 9063 9.06 1 0

4 58001 59000 7 10256 10.3 3 2

5 59001 60000 4 8057 8.06 4 0

6 60001 61000 6 7051 7.05 2 1

7 61001 62000 7 6950 6.95 2 1

8 62001 63000 1 8834 8.83 1 0

9 63001 64000 1 9629 9.63 1 0

10 64001 65000 3 7999 8 1 1

# … with 59,130 more rows, and 9 more variables: reference Bases <dbl>,

# Theta <dbl>, Pi <dbl>, Heterozygosity <dbl>, %GC <dbl>,

# Recombination <dbl>, Divergence <dbl>, Constraint <dbl>, SNPs <dbl>

Question:

Why don’t we see the column in the dataset?

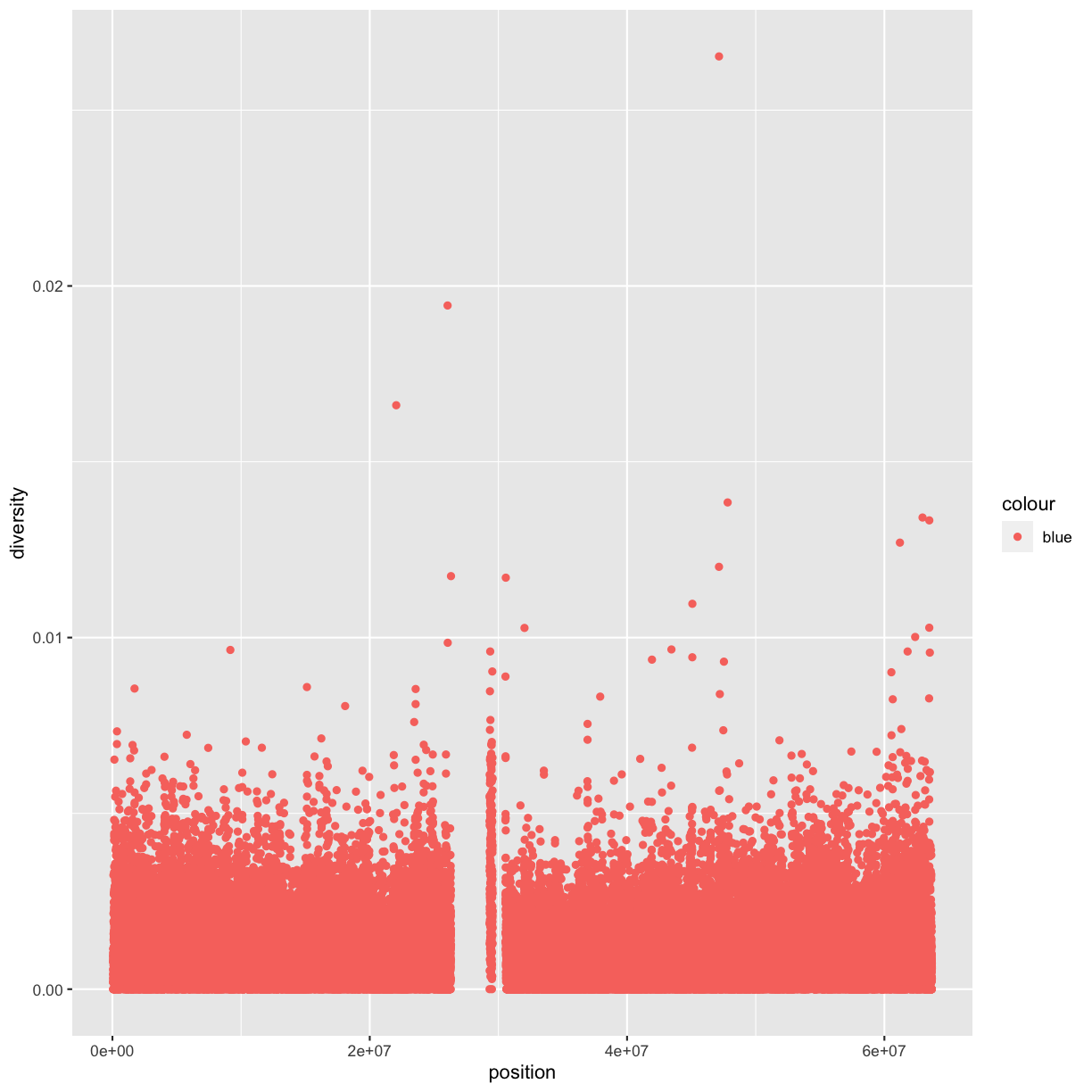

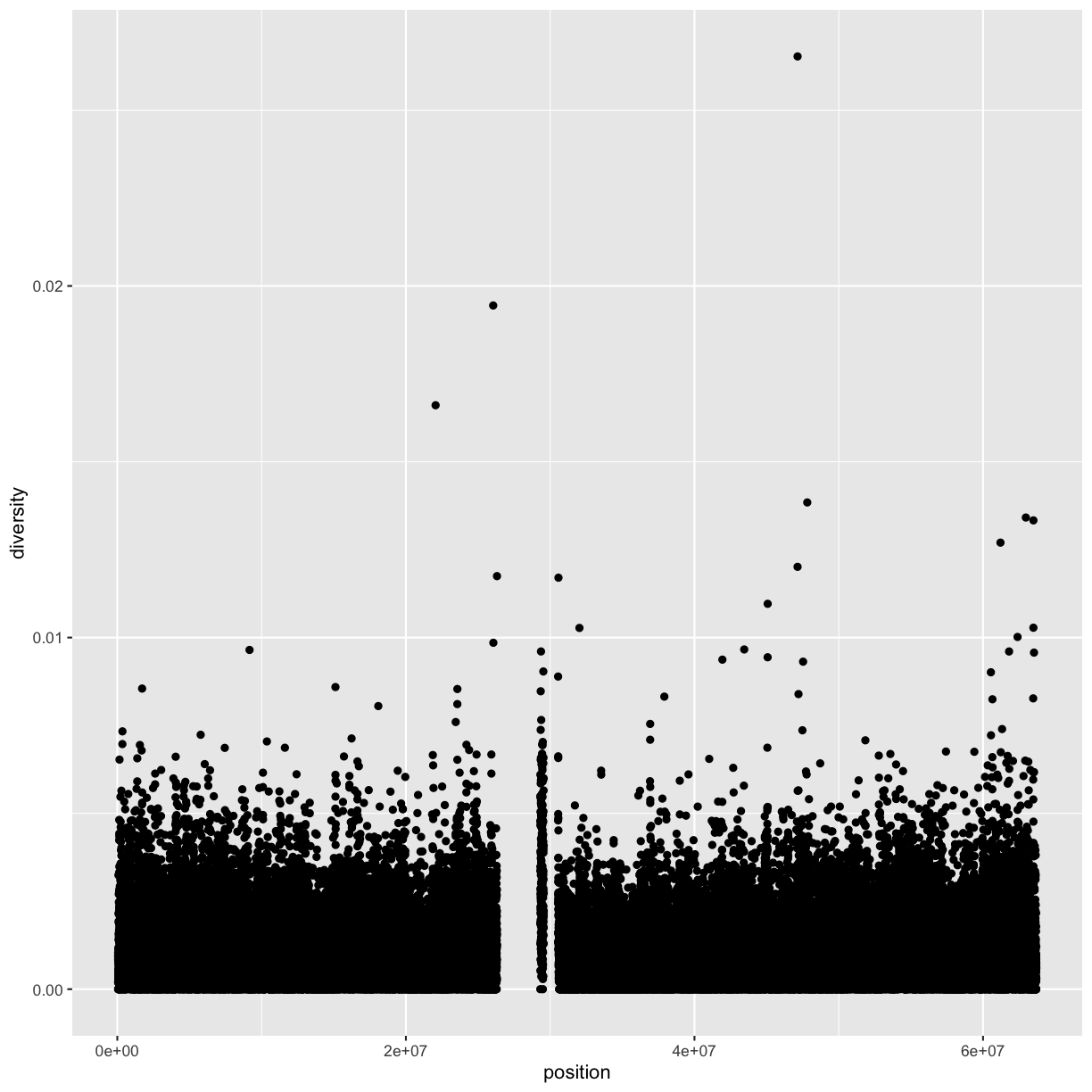

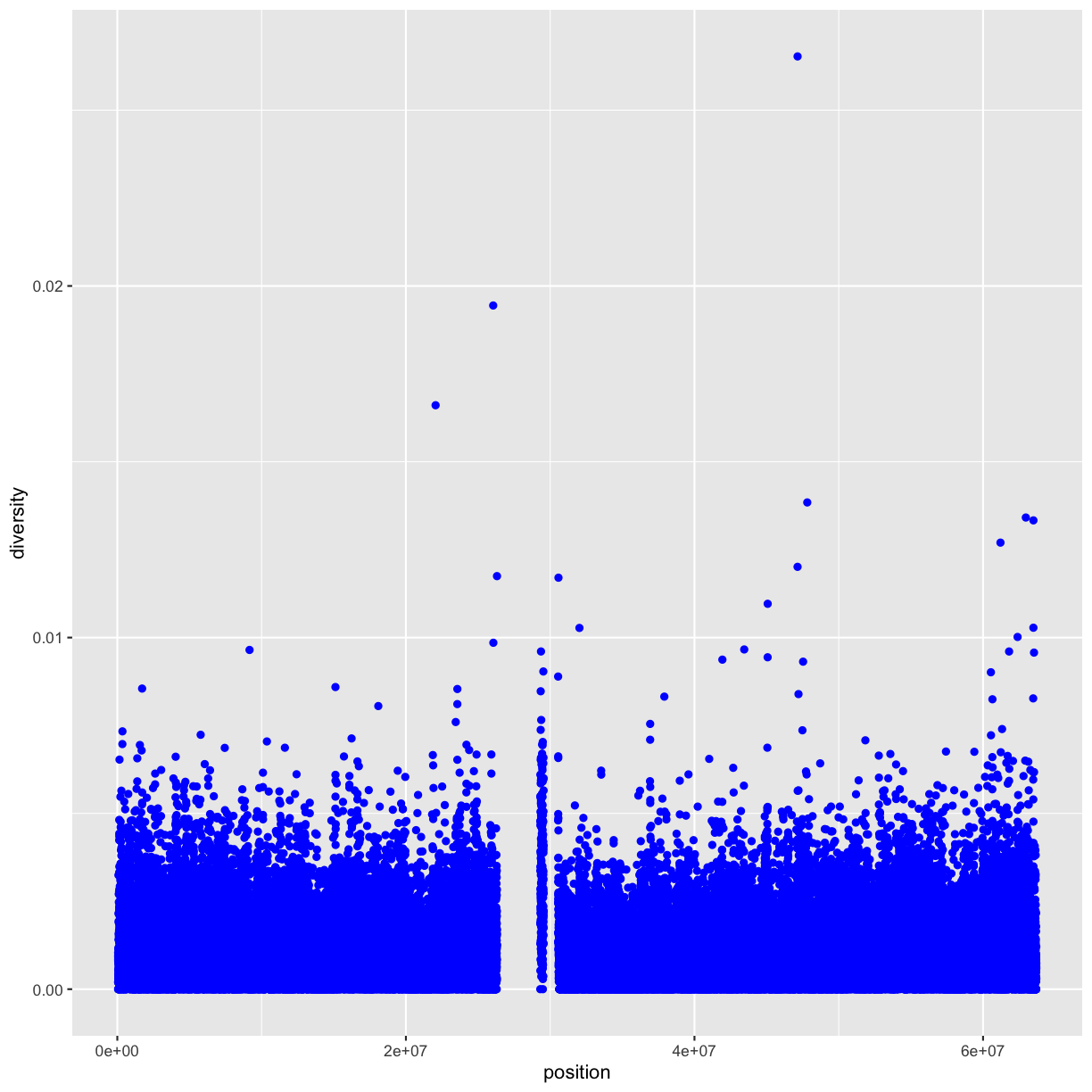

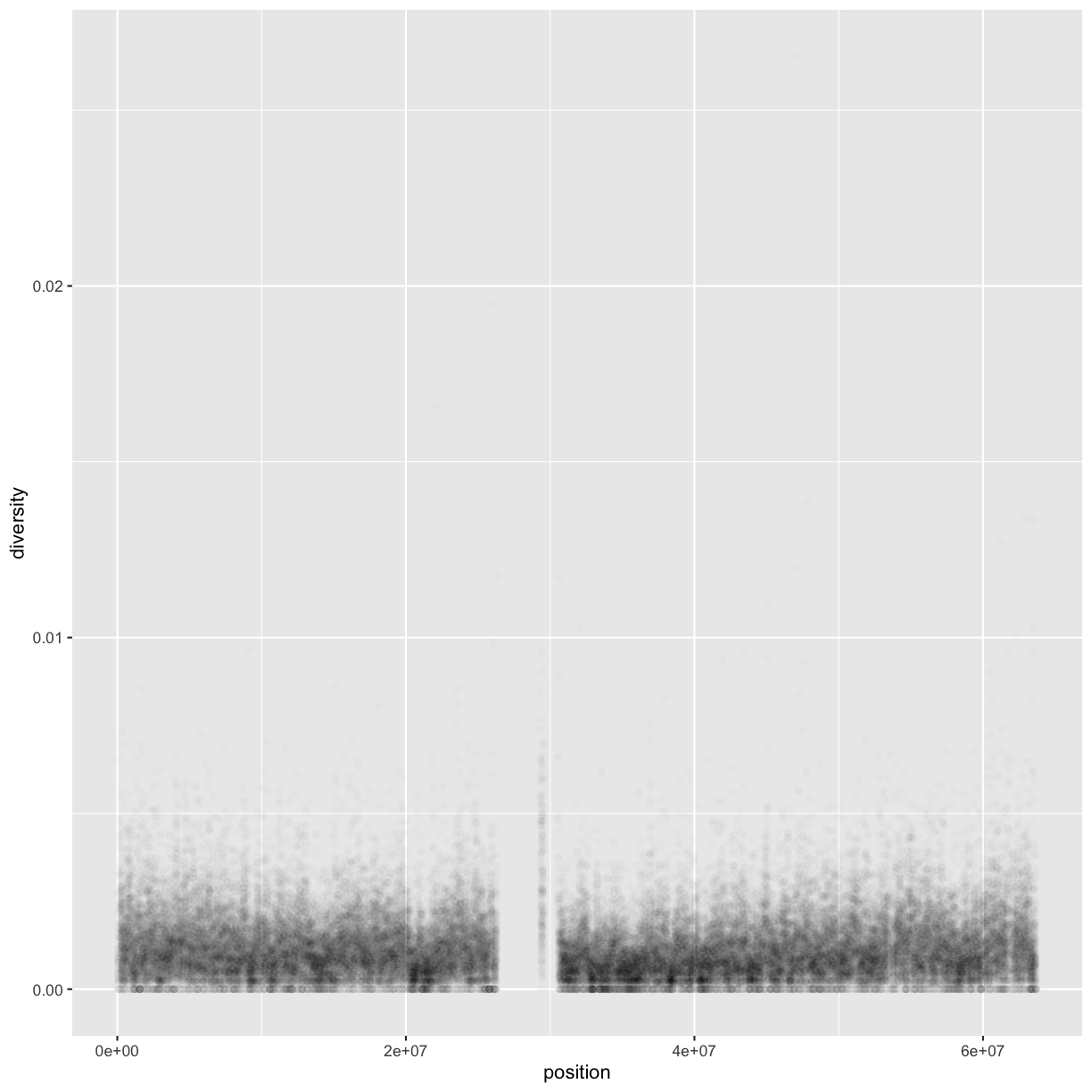

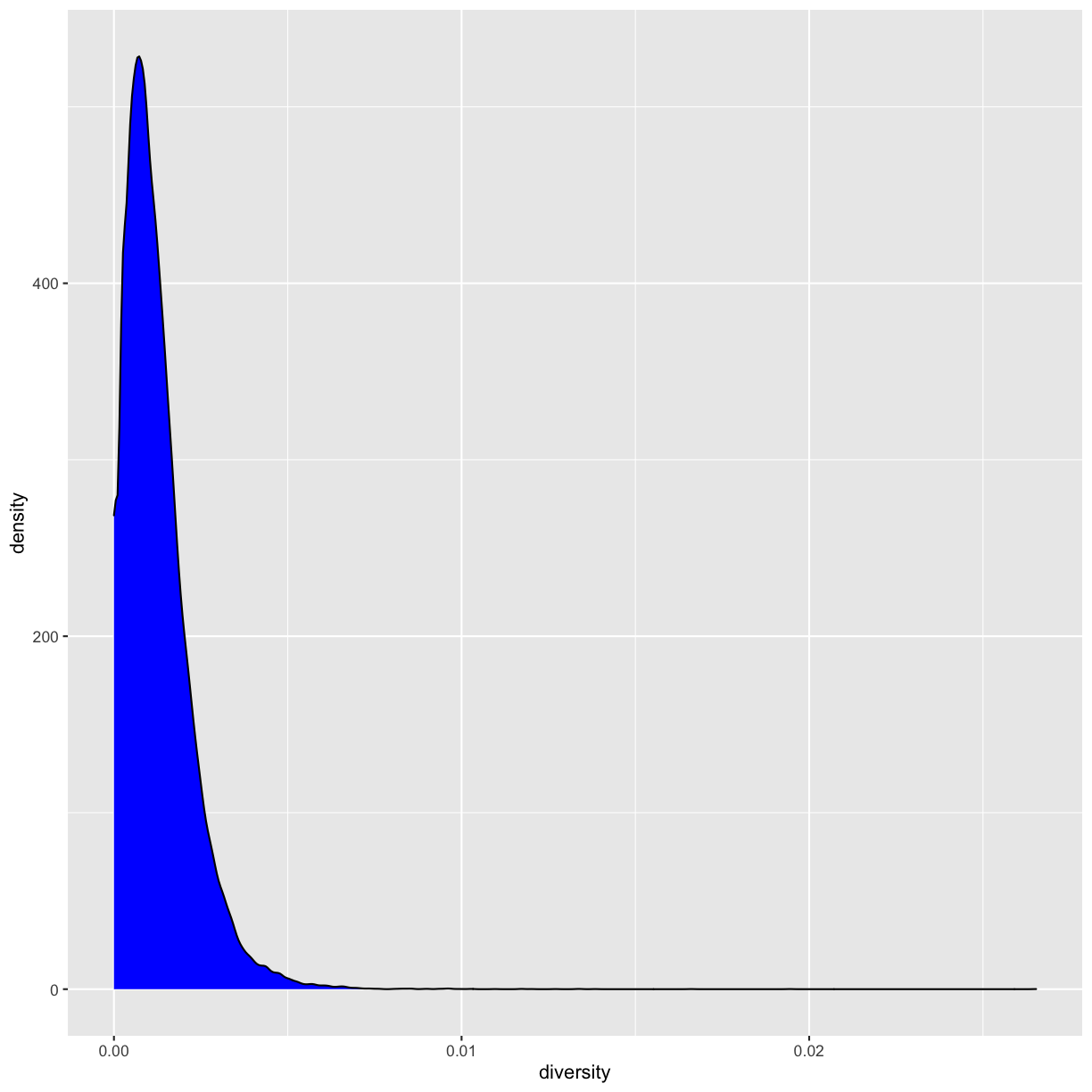

In the dataset we are using, the diversity estimate Pi is measured per sampling window (1kb) and scaled up by 10x (see supplementary Text S1 for more details). It would be useful to have this scaled as per basepair nucleotide diversity (so as to make the scale more intuitive).

mutate(dvst, diversity = Pi / (10*1000)) # rescale, removing 10x and making per bp

# A tibble: 59,140 x 17

start end `total SNPs` `total Bases` depth `unique SNPs` dhSNPs

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 55001 56000 0 1894 3.41 0 0

2 56001 57000 5 6683 6.68 2 2

3 57001 58000 1 9063 9.06 1 0

4 58001 59000 7 10256 10.3 3 2

5 59001 60000 4 8057 8.06 4 0

6 60001 61000 6 7051 7.05 2 1

7 61001 62000 7 6950 6.95 2 1

8 62001 63000 1 8834 8.83 1 0

9 63001 64000 1 9629 9.63 1 0

10 64001 65000 3 7999 8 1 1

# … with 59,130 more rows, and 10 more variables: reference Bases <dbl>,

# Theta <dbl>, Pi <dbl>, Heterozygosity <dbl>, %GC <dbl>,

# Recombination <dbl>, Divergence <dbl>, Constraint <dbl>, SNPs <dbl>,

# diversity <dbl>

Notice that we can combine the two operations in a single command (and also save the result):

dvst <- mutate(dvst,

diversity = Pi / (10*1000),

cent = start >= 25800000 & end <= 29700000

)

If you only want to keep the new variables, use transmute():

transmute(dvst,

diversity = Pi / (10*1000),

cent = start >= 25800000 & end <= 29700000

)

# A tibble: 59,140 x 2

diversity cent

<dbl> <lgl>

1 0 FALSE

2 0.00104 FALSE

3 0.000199 FALSE

4 0.000956 FALSE

5 0.000851 FALSE

6 0.000912 FALSE

7 0.000806 FALSE

8 0.000206 FALSE

9 0.000188 FALSE

10 0.000541 FALSE

# … with 59,130 more rows

Other creation functions

There are many functions for creating new variables that you can use with

mutate(). The key property is that the function must be vectorised: it must take a vector of values as input and return a vector with the same number of values as output. There’s no way to list every possible function that you might use, but here’s a selection of functions that are frequently useful:

- Arithmetic operators: +, -, *, /, ^. These are all vectorised, using the so-called “recycling rules”.

- Modular arithmetic: %/% (integer division) and %% (remainder), where x == y * (x %/% y) + (x %% y). Modular arithmetic is a handy tool because it allows you to break integers up into pieces.

- Logs: log(), log2(), log10(). Logarithms are an incredibly useful transformation for dealing with data that ranges across multiple orders of magnitude. They also convert multiplicative relationships to additive.

- Offsets: lead() and lag() allow you to refer to leading or lagging values. This allows you to compute running differences (e.g. x - lag(x)) or find when values change (x != lag(x)). They are most useful in conjunction with group_by(), which you’ll learn about shortly.

- Cumulative and rolling aggregates: R provides functions for running sums, products, mins and maxes: cumsum(), cumprod(), cummin(), cummax(); and dplyr provides cummean() for cumulative means.

- Logical comparisons: <, <=, >, >=, !=, which you learned about earlier. If you’re doing a complex sequence of logical operations it’s often a good idea to store the interim values in new variables so you can check that each step is working as expected.

- Ranking: min_rank() does the most usual type of ranking (e.g. 1st, 2nd, 2nd, 4th). The default gives smallest values the small ranks; use desc(x) to give the largest values the smallest ranks. If min_rank() doesn’t do what you need, look at the variants row_number(), dense_rank(), percent_rank(), cume_dist(), ntile(). See their help pages for more details.
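Several of the base R functions in this list can be tried on plain vectors before using them inside mutate(). In this sketch, `pos` is an illustrative vector of made-up start positions; only base R is used, so the dplyr helpers (lead(), lag(), min_rank()) are left out.

```r
# `pos` is an illustrative vector of start positions
pos <- c(55001, 56001, 1200500, 25869001)

# modular arithmetic: split a position into whole megabases and the remainder
pos %/% 1e6          # 0 0 1 25
pos %%  1e6          # 55001 56001 200500 869001

# the identity x == y * (x %/% y) + (x %% y)
all(pos == 1e6 * (pos %/% 1e6) + (pos %% 1e6))   # TRUE

# logs compress values spanning several orders of magnitude
log10(c(1, 10, 1000, 1e6))    # 0 1 3 6

# cumulative aggregates from base R
cumsum(c(1, 2, 3, 4))         # 1 3 6 10
cummax(c(1, 3, 2, 5))         # 1 3 3 5

# recycling: the shorter vector is reused to match the longer one
c(1, 2, 3, 4) * c(10, 100)    # 10 200 30 400
```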

Your turn!

- Find all sampling windows …

- with the lowest/highest GC content

- that have 0 total SNPs

- that are incomplete (<1000 bp)

- that have the highest divergence with the chimp

- that have less than 5% GC difference from the mean

- One useful dplyr filtering helper is between().

- Can you figure out what it does?

- Use it to simplify the code needed to answer the previous challenges.

- How many windows fall into the centromeric region?

Use arrange() to arrange rows

arrange() works similarly to filter() except that instead of selecting rows,

it changes their order. It takes a data frame and a set of column names (or more

complicated expressions) to order by. If you provide more than one column name,

each additional column will be used to break ties in the values of preceding

columns:

arrange(dvst, cent, `%GC`)

# A tibble: 59,140 x 18

start end `total SNPs` `total Bases` depth `unique SNPs` dhSNPs

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 43448001 43449000 5 690 2.66 5 0

2 14930001 14931000 19 3620 4.57 7 1

3 37777001 37778000 3 615 1.12 3 0

4 54885001 54886000 1 2298 3.69 1 0

5 54888001 54889000 15 3823 3.87 11 1

6 41823001 41824000 15 6950 6.95 10 4

7 50530001 50531000 10 7293 7.29 2 2

8 39612001 39613000 13 8254 8.25 5 3

9 55012001 55013000 7 7028 7.03 7 0

10 22058001 22059000 4 281 1.2 4 0

# … with 59,130 more rows, and 11 more variables: reference Bases <dbl>,

# Theta <dbl>, Pi <dbl>, Heterozygosity <dbl>, %GC <dbl>,

# Recombination <dbl>, Divergence <dbl>, Constraint <dbl>, SNPs <dbl>,

# diversity <dbl>, cent <lgl>

Use desc() to re-order by a column in descending order:

arrange(dvst, desc(cent), `%GC`)

# A tibble: 59,140 x 18

start end `total SNPs` `total Bases` depth `unique SNPs` dhSNPs

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 25869001 25870000 10 7292 7.29 6 2

2 29318001 29319000 2 6192 6.19 2 0

3 29372001 29373000 5 7422 7.42 3 2

4 26174001 26175000 1 7816 7.82 1 0

5 26182001 26183000 2 6630 6.63 1 1

6 25924001 25925000 30 7127 7.13 15 8

7 26155001 26156000 0 9449 9.45 0 0

8 25926001 25927000 21 8197 8.2 13 6

9 29519001 29520000 15 8135 8.14 13 2

10 25901001 25902000 9 9126 9.13 5 2

# … with 59,130 more rows, and 11 more variables: reference Bases <dbl>,

# Theta <dbl>, Pi <dbl>, Heterozygosity <dbl>, %GC <dbl>,

# Recombination <dbl>, Divergence <dbl>, Constraint <dbl>, SNPs <dbl>,

# diversity <dbl>, cent <lgl>

Note that missing values are always sorted at the end!
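The placement of missing values is easy to verify on a tiny example. This sketch assumes dplyr is installed; `toy` is a made-up one-column tibble.

```r
library(dplyr)

# `toy` is a made-up tibble with one missing value
toy <- tibble(x = c(5, 2, NA, 9))

arrange(toy, x)         # 2, 5, 9, NA
arrange(toy, desc(x))   # 9, 5, 2, NA: the NA is still last
```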

Use select() to select columns and rename() to rename them

select() is not terribly useful with our data because they only have a few

variables, but you can still get the general idea:

# Select columns by name

select(dvst, start, end, Divergence)

# A tibble: 59,140 x 3

start end Divergence

<dbl> <dbl> <dbl>

1 55001 56000 0.00301

2 56001 57000 0.0180

3 57001 58000 0.00701

4 58001 59000 0.0120

5 59001 60000 0.0240

6 60001 61000 0.0160

7 61001 62000 0.0120

8 62001 63000 0.0150

9 63001 64000 0.00901

10 64001 65000 0.00701

# … with 59,130 more rows

# Select all columns between depth and Pi (inclusive)

select(dvst, depth:Pi)

# A tibble: 59,140 x 6

depth `unique SNPs` dhSNPs `reference Bases` Theta Pi

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 3.41 0 0 556 0 0

2 6.68 2 2 1000 8.01 10.4

3 9.06 1 0 1000 3.51 1.99

4 10.3 3 2 1000 9.93 9.56

5 8.06 4 0 1000 12.9 8.51

6 7.05 2 1 1000 7.82 9.12

7 6.95 2 1 1000 8.60 8.06

8 8.83 1 0 1000 4.01 2.06

9 9.63 1 0 1000 3.37 1.88

10 8 1 1 1000 4.16 5.41

# … with 59,130 more rows

# Select all columns except those from start to %GC (inclusive)

select(dvst, -(start:`%GC`))

# A tibble: 59,140 x 6

Recombination Divergence Constraint SNPs diversity cent

<dbl> <dbl> <dbl> <dbl> <dbl> <lgl>

1 0.00960 0.00301 0 0 0 FALSE

2 0.00960 0.0180 0 0 0.00104 FALSE

3 0.00960 0.00701 0 0 0.000199 FALSE

4 0.00960 0.0120 0 0 0.000956 FALSE

5 0.00960 0.0240 0 0 0.000851 FALSE

6 0.00960 0.0160 0 0 0.000912 FALSE

7 0.00960 0.0120 0 0 0.000806 FALSE

8 0.00960 0.0150 0 0 0.000206 FALSE

9 0.00960 0.00901 58 1 0.000188 FALSE

10 0.00958 0.00701 0 1 0.000541 FALSE

# … with 59,130 more rows

select() can also be used to rename variables, but it’s rarely useful because

it drops all of the variables not explicitly mentioned. Instead, use rename(),

which is a variant of select() that keeps all the variables that aren’t

explicitly mentioned:

dvst <- rename(dvst, total.SNPs = `total SNPs`,

total.Bases = `total Bases`,

unique.SNPs = `unique SNPs`,

reference.Bases = `reference Bases`,

percent.GC = `%GC`) #renaming all the columns that require ` `!

colnames(dvst)

[1] "start" "end" "total.SNPs" "total.Bases"

[5] "depth" "unique.SNPs" "dhSNPs" "reference.Bases"

[9] "Theta" "Pi" "Heterozygosity" "percent.GC"

[13] "Recombination" "Divergence" "Constraint" "SNPs"

[17] "diversity" "cent"
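To see why rename() is usually the better choice, here is a minimal sketch comparing the two functions on a hypothetical three-column tibble (the data are invented for illustration):

```r
library(dplyr)

# Hypothetical toy data with one column name that needs backticks
toy <- tibble(
  start        = 1:3,
  `total SNPs` = c(0, 5, 1),
  depth        = c(3.4, 6.7, 9.1)
)

# select() renames, but keeps only the columns that are mentioned
renamed_with_select <- select(toy, total.SNPs = `total SNPs`)

# rename() renames and keeps every other column in place
renamed_with_rename <- rename(toy, total.SNPs = `total SNPs`)

colnames(renamed_with_select)
colnames(renamed_with_rename)
```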

Another option is to use select() in conjunction with the everything() helper.

This is useful if you have a handful of variables you’d like to move to the start

of the data frame:

select(dvst, cent, everything())

# A tibble: 59,140 x 18

cent start end total.SNPs total.Bases depth unique.SNPs dhSNPs

<lgl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 FALSE 55001 56000 0 1894 3.41 0 0

2 FALSE 56001 57000 5 6683 6.68 2 2

3 FALSE 57001 58000 1 9063 9.06 1 0

4 FALSE 58001 59000 7 10256 10.3 3 2

5 FALSE 59001 60000 4 8057 8.06 4 0

6 FALSE 60001 61000 6 7051 7.05 2 1

7 FALSE 61001 62000 7 6950 6.95 2 1

8 FALSE 62001 63000 1 8834 8.83 1 0

9 FALSE 63001 64000 1 9629 9.63 1 0

10 FALSE 64001 65000 3 7999 8 1 1

# … with 59,130 more rows, and 10 more variables: reference.Bases <dbl>,

# Theta <dbl>, Pi <dbl>, Heterozygosity <dbl>, percent.GC <dbl>,

# Recombination <dbl>, Divergence <dbl>, Constraint <dbl>, SNPs <dbl>,

# diversity <dbl>

Use summarise() to make summaries

The last key verb is summarise(). It collapses a data frame to a single row:

summarise(dvst, GC = mean(percent.GC, na.rm = TRUE), averageSNPs=mean(total.SNPs,

na.rm = TRUE), allSNPs=sum(total.SNPs))

# A tibble: 1 x 3

GC averageSNPs allSNPs

<dbl> <dbl> <dbl>

1 44.1 8.91 526693

summarise() is not terribly useful unless we pair it with group_by().

This changes the unit of analysis from the complete dataset to individual groups.

Then, when you use the dplyr verbs on a grouped data frame, they are automatically applied “by group”. For example, applying exactly the same code to a data frame grouped by position relative to the centromere gives one summary row per group:

by_cent <- group_by(dvst, cent)

summarise(by_cent, GC = mean(percent.GC, na.rm = TRUE), averageSNPs=mean(total.SNPs, na.rm = TRUE), allSNPs=sum(total.SNPs))

# A tibble: 2 x 4

cent GC averageSNPs allSNPs

<lgl> <dbl> <dbl> <dbl>

1 FALSE 44.2 8.87 518743

2 TRUE 39.7 11.6 7950

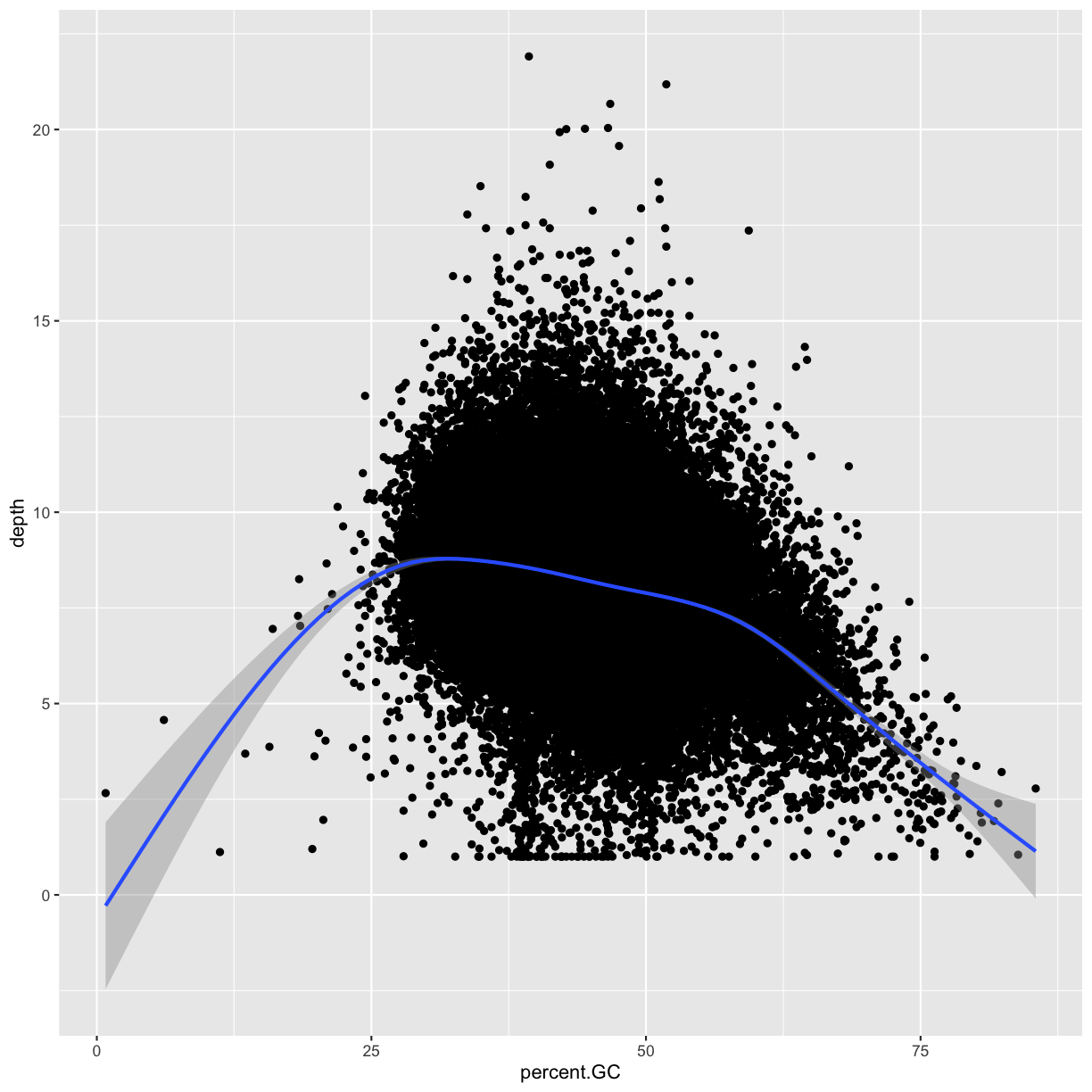

Grouping by a logical expression can be a useful way to summarize data across two different conditions. For example, we might be curious whether the average depth in a window (the depth column) differs between very high GC content windows (80% or greater) and all other windows:

by_GC <- group_by(dvst, percent.GC >= 80)

summarize(by_GC, depth=mean(depth))

# A tibble: 2 x 2

`percent.GC >= 80` depth

<lgl> <dbl>

1 FALSE 8.18

2 TRUE 2.24

This is a fairly large difference, but it’s important to consider how many windows this includes.

Whenever you do any aggregation, it’s always a good idea to include either a count (n()),

or a count of non-missing values (sum(!is.na(x))). That way you can check that you’re not

drawing conclusions based on very small amounts of data:

summarize(by_GC, mean_depth=mean(depth), n_rows=n())

# A tibble: 2 x 3

`percent.GC >= 80` mean_depth n_rows

<lgl> <dbl> <int>

1 FALSE 8.18 59131

2 TRUE 2.24 9
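The same pattern can be checked by hand on a small table. The sketch below uses a hypothetical toy tibble (values invented) and reports both the group size and the number of non-missing values, so that a mean based on very few observations is easy to spot:

```r
library(dplyr)

# Hypothetical toy data: six windows, one with a missing depth
toy <- tibble(
  percent.GC = c(35, 42, 81, 47, 90, 50),
  depth      = c(8, 9, 2, 7, NA, 10)
)

# Group by a logical expression; naming it gives a tidier column header
by_GC <- group_by(toy, high_GC = percent.GC >= 80)

toy_summary <- summarise(
  by_GC,
  mean_depth = mean(depth, na.rm = TRUE),
  n_rows     = n(),                  # rows in the group
  n_depth    = sum(!is.na(depth))    # rows with a usable depth value
)

toy_summary
```

Here the high-GC group has two rows but only one non-missing depth, which is exactly the kind of detail that a bare mean would hide.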

Your turn

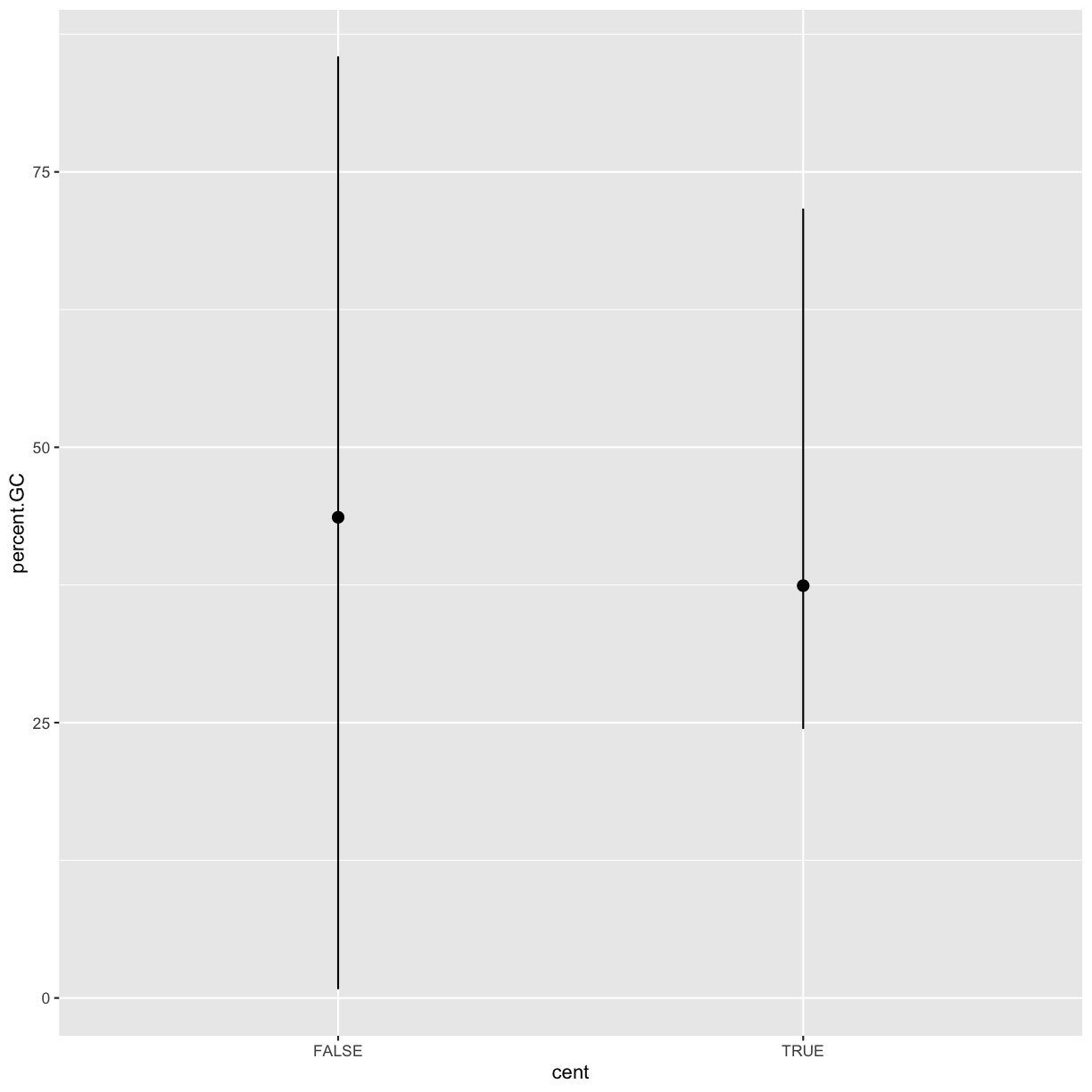

As another example, consider looking at Pi by windows that fall in the centromere and those that do not. Does the centromere have higher nucleotide diversity than other regions in these data?

Solutions

Because cent is a logical vector, we can group by it directly:

by_cent <- group_by(dvst, cent)

summarize(by_cent, nt_diversity=mean(diversity), min=which.min(diversity), n_rows=n())

# A tibble: 2 x 4

cent nt_diversity min n_rows

<lgl> <dbl> <int> <int>

1 FALSE 0.00123 1 58455

2 TRUE 0.00204 1 685

Indeed, the centromere does appear to have higher nucleotide diversity than other regions in these data.

Useful summary functions

Just using means, counts, and sum can get you a long way, but R provides many other useful summary functions:

- Measures of location: we’ve used mean(x), but median(x) is also useful.

- Measures of spread: sd(x), IQR(x), mad(x). The root mean squared deviation, or standard deviation (sd for short), is the standard measure of spread. The interquartile range IQR(x) and median absolute deviation mad(x) are robust equivalents that may be more useful if you have outliers.

- Measures of rank: min(x), quantile(x, 0.25), max(x). Quantiles are a generalisation of the median. For example, quantile(x, 0.25) will find a value of x that is greater than 25% of the values, and less than the remaining 75%.

- Measures of position: first(x), nth(x, 2), last(x). These work similarly to x[1], x[2], and x[length(x)] but let you set a default value if that position does not exist (e.g. you’re trying to get the 3rd element from a group that only has two elements).

- Counts: You’ve seen n(), which takes no arguments, and returns the size of the current group. To count the number of non-missing values, use sum(!is.na(x)). To count the number of distinct (unique) values, use n_distinct(x).

- Counts and proportions of logical values: sum(x > 10), mean(y == 0). When used with numeric functions, TRUE is converted to 1 and FALSE to 0. This makes sum() and mean() very useful: sum(x) gives the number of TRUEs in x, and mean(x) gives the proportion.
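Several of these functions can be combined in a single summarise() call. The following is a minimal sketch on a hypothetical toy tibble (group labels and values are invented), not a recipe for the dvst data:

```r
library(dplyr)

# Hypothetical toy data: group "a" has an outlier, group "b" has a missing value
toy <- tibble(
  group = c("a", "a", "a", "b", "b"),
  x     = c(1, 2, 30, 4, NA)
)

summaries <- summarise(
  group_by(toy, group),
  med          = median(x, na.rm = TRUE),   # location, robust to the outlier
  spread       = IQR(x, na.rm = TRUE),      # robust measure of spread
  first_x      = first(x),                  # position: first value in the group
  third_x      = nth(x, 3),                 # NA when the group has fewer than 3 rows
  n_nonmissing = sum(!is.na(x)),            # count of non-missing values
  prop_big     = mean(x > 10, na.rm = TRUE) # proportion of values above 10
)

summaries
```

Group "b" has only two rows, so nth(x, 3) falls back to its default of NA rather than raising an error.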

Together group_by() and summarise() provide one of the tools that you’ll use

most commonly when working with dplyr: grouped summaries. But before we go any

further with this, we need to introduce a powerful new idea: the pipe.

Combining multiple operations with the pipe

So far we have been creating and naming several intermediate objects (such as by_cent and by_GC) in our analysis, even though we didn’t care about them. Naming things is hard, so this slows down our analysis.

There’s another way to tackle the same problem with the pipe, %>%:

dvst %>%

rename(GC.percent = percent.GC) %>%

group_by(GC.percent >= 80) %>%

summarize(mean_depth=mean(depth, na.rm = TRUE), n_rows=n())

# A tibble: 2 x 3

`GC.percent >= 80` mean_depth n_rows

<lgl> <dbl> <int>

1 FALSE 8.18 59131

2 TRUE 2.24 9

This focuses on the transformations, not what’s being transformed. You can read it as a series of imperative statements: rename, then group, then summarise, where %>% stands for “then”.

Behind the scenes, x %>% f(y) turns into f(x, y), and x %>% f(y) %>% g(z)

turns into g(f(x, y), z) and so on.
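The equivalence between nested calls and piped calls can be verified directly. This sketch uses a hypothetical four-row tibble (values invented) and compares the two ways of writing the same grouped summary:

```r
library(dplyr)

# Hypothetical toy data
toy <- tibble(
  cent  = c(TRUE, FALSE, FALSE, TRUE),
  depth = c(2, 8, 10, 4)
)

# Nested calls: read from the inside out
nested <- summarise(group_by(toy, cent), mean_depth = mean(depth))

# Piped calls: read from top to bottom
piped <- toy %>%
  group_by(cent) %>%
  summarise(mean_depth = mean(depth))

# Both forms should produce the same result
all.equal(nested, piped)
```

The piped version needs no intermediate object such as by_cent, which is the main practical benefit.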

Working with the pipe is one of the key criteria for belonging to the tidyverse. The only exception is ggplot2: it was written before the pipe was discovered, so its layers are combined with + instead. ggvis, which was intended as the next iteration of ggplot2, does use the pipe.

RStudio Tip

A shortcut for %>% is available in the newest RStudio releases under the keybinding CTRL + SHIFT + M (or CMD + SHIFT + M for OSX).

You can also review the set of available keybindings from within RStudio with ALT + SHIFT + K.

Missing values

You may have wondered about the na.rm argument we used above. What happens if we don’t set it? We may get a lot of missing values! That’s because aggregation functions obey the usual rule of missing values: if there’s any missing value in the input, the output will be a missing value. Fortunately, most aggregation functions, including mean() and sum(), have an na.rm argument which removes the missing values prior to computation. We could also have removed all the rows that have an unknown position value prior to the analysis with a filter(!is.na(x)) call, where x is the column of interest.
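The three behaviours described here can be compared side by side. The sketch below assumes a hypothetical toy tibble with a single missing depth value (the data are invented):

```r
library(dplyr)

# Hypothetical toy data: one depth value is missing in the cent == FALSE group
toy <- tibble(
  cent  = c(FALSE, FALSE, TRUE, TRUE),
  depth = c(8, NA, 2, 4)
)

# Without na.rm, a single NA makes the whole group mean NA
with_na <- toy %>%
  group_by(cent) %>%
  summarise(mean_depth = mean(depth))

# With na.rm = TRUE, missing values are dropped before computing the mean
na_removed <- toy %>%
  group_by(cent) %>%
  summarise(mean_depth = mean(depth, na.rm = TRUE))

# Alternatively, filter out the incomplete rows first
filtered <- toy %>%
  filter(!is.na(depth)) %>%
  group_by(cent) %>%
  summarise(mean_depth = mean(depth))

with_na
na_removed
filtered
```

The last two approaches give the same means here, but filtering first also changes what n() would report for each group, so the choice depends on which count you want.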

Finally, one of the best features of dplyr is that all of these same methods also work with database connections. For example, you can manipulate a SQLite database with all of the same verbs we’ve used here.

Extra reading: tidy data

The tidyverse collection of packages that we’ve been using is designed to operate on tidy data. But what is tidy data?

Tidy data is a standard way of storing data where:

- Every column is a variable.

- Every row is an observation.

- Every cell is a single value.

If you ensure that your data is tidy, you’ll spend less time fighting with the tools and more time working on your analysis. Learn more about tidy data in vignette("tidy-data").

The tidyr package within the tidyverse helps you create tidy data. tidyr functions fall into five main categories:

- Pivoting, which converts between long and wide forms. tidyr 1.0.0 introduces pivot_longer() and pivot_wider(), replacing the older gather() and spread() functions, respectively.

- Rectangling, which turns deeply nested lists (as from JSON) into tidy tibbles.

- Nesting converts grouped data to a form where each group becomes a single row containing a nested data frame, and unnesting does the opposite.

- Splitting and combining character columns. Use separate() and extract() to pull a single character column into multiple columns; use unite() to combine multiple columns into a single character column.

- Handling missing values: Make implicit missing values explicit with complete(); make explicit missing values implicit with drop_na(); replace missing values with next/previous value with fill(), or a known value with replace_na().
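As a brief illustration of the last two categories, the sketch below applies a few of these functions to hypothetical toy tibbles (the sample names, years, counts, and identifiers are all invented):

```r
library(tidyr)

# Hypothetical toy data: sample B has no row at all for 2020 (implicit missing),
# and sample A has an explicit NA for 2020
toy <- tibble::tibble(
  sample = c("A", "A", "B"),
  year   = c(2019, 2020, 2019),
  count  = c(5, NA, 7)
)

completed <- complete(toy, sample, year)            # adds the B / 2020 row
zero_fill <- replace_na(completed, list(count = 0)) # replace NA with a known value
dropped   <- drop_na(completed)                     # keep only complete rows

# Splitting and recombining a character column
ids       <- tibble::tibble(id = c("chr1_100", "chr2_250"))
split_ids <- separate(ids, id, into = c("chrom", "pos"), sep = "_")
rejoined  <- unite(split_ids, "id", chrom, pos, sep = "_")

completed
split_ids
```

Note that separate() returns the new columns as character vectors by default, so pos would still need converting before any numeric work.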

See https://tidyr.tidyverse.org/ for more info.

Pivot Longer

pivot_longer() makes datasets longer by increasing the number of rows and decreasing the number of columns.

pivot_longer() is commonly needed to tidy wild-caught datasets as they often optimise for ease of data entry or ease of comparison rather than ease of analysis.

Here is an example of an untidy dataset:

relig_income

# A tibble: 18 x 11

religion `<$10k` `$10-20k` `$20-30k` `$30-40k` `$40-50k` `$50-75k` `$75-100k`

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 Agnostic 27 34 60 81 76 137 122

2 Atheist 12 27 37 52 35 70 73

3 Buddhist 27 21 30 34 33 58 62

4 Catholic 418 617 732 670 638 1116 949

5 Don’t k… 15 14 15 11 10 35 21

6 Evangel… 575 869 1064 982 881 1486 949

7 Hindu 1 9 7 9 11 34 47

8 Histori… 228 244 236 238 197 223 131

9 Jehovah… 20 27 24 24 21 30 15

10 Jewish 19 19 25 25 30 95 69

11 Mainlin… 289 495 619 655 651 1107 939

12 Mormon 29 40 48 51 56 112 85

13 Muslim 6 7 9 10 9 23 16

14 Orthodox 13 17 23 32 32 47 38

15 Other C… 9 7 11 13 13 14 18

16 Other F… 20 33 40 46 49 63 46

17 Other W… 5 2 3 4 2 7 3

18 Unaffil… 217 299 374 365 341 528 407

# … with 3 more variables: $100-150k <dbl>, >150k <dbl>,

# Don't know/refused <dbl>

This dataset contains three variables:

- religion, stored in the rows,

- income, spread across the column names, and

- count, stored in the cell values.

To tidy it we use